Top Qs

Timeline

Chat

Perspective

Bernoulli's method

Polynomial root-finding algorithm From Wikipedia, the free encyclopedia

Remove ads

In numerical analysis, Bernoulli's method, named after Daniel Bernoulli, is a root-finding algorithm which calculates the root of largest absolute value of a univariate polynomial.[2][3] The method works under the condition that there is only one root (possibly multiple) of maximal absolute value. The method computes the root of maximal absolute value as the limit of the quotients of two successive terms of a sequence defined by a linear recurrence whose coefficients are those of the polynomial.

Since the method converges with a linear order only, it is less efficient than other methods, such as Newton's method. However, it can be useful for finding an initial guess ensuring that these other methods converge to the root of maximal absolute value.[4] Bernoulli's method holds historical significance as an early approach to numerical root-finding and provides an elegant connection between recurrence relations and polynomial roots.

Remove ads

History

Bernoulli's method was first introduced by Swiss-French mathematician and physicist Daniel Bernoulli (1700-1782) in 1728.[1][6] He noticed a trend from recurrent series created using polynomial coefficients growing by a ratio related to a root of the polynomial but did not prove why it worked.[6] In 1725, Bernoulli moved to Saint Petersburg with his brother Nicolaus II Bernoulli, who unfortunately died of fever in 1726.[7] While there, he worked closely with Leonhard Euler, a student of Johann Bernoulli, and made many advancements in harmonics, mathematical economics (see St. Petersburg paradox), and hydrodynamics.[7] Euler called Bernoulli's method "frequently very useful" and gave a justification for why it works in 1748.[8][3] The mathematician Joseph-Louis Lagrange expanded on this for the case of multiple roots in 1798.[5][3] Bernoulli's method predates other root-finding algorithms like Graeffe's method (1826 to Dandelin)[9][10] and is contemporary to Halley's method (1694).[11][12] Since then, it has influenced the development of more modern algorithms such as the QD method.[13][14]

Remove ads

The method

Summarize

Perspective

Given a polynomial of degree d with complex coefficients. Choose d starting values that are usually .[15] Then, consider the sequence defined by the recurrence relation[2]

Let be the ratio of successive terms of the sequence. If there is only one complex root of maximal absolute value, then the sequence of the has a limit that is this root.[16]

If the coefficients of the polynomial are real then, via the complex conjugate root theorem, each of the polynomial's roots must be either a real number or part of a complex conjugate pair. Therefore, if the polynomial contains a single dominant complex root, then the coefficients must include a complex number, and so the sequence generated using the coefficients will contain complex numbers. Bernoulli's method will work to find a single dominant root, regardless of whether it is real or complex.[2] If a root is part of a complex conjugate pair, then each root in the pair has the same maximal absolute value, and a modified form of Bernoulli's method is needed to calculate them.[3]

Remove ads

Derivation of the method

Summarize

Perspective

The solutions of the n-th order difference equation

have the form

where the are the distinct complex roots of p, and is polynomial in m of degree less than the multiplicity of . For simple roots, is a constant. The coefficients can be determined from the first d terms of the sequence by solving a linear system of equations. This system has always a unique solution since its matrix is a Vandermonde matrix if the roots are simple, or a confluent Vandermonde matrix otherwise.[17]

The quotient of two successive terms of the sequence is

Factoring out gives

Assuming is the dominant root, such that for , each ratio has an absolute value less than 1. Thus as m increases, approaches zero, so even for non-constant . Hence the limit of the fraction is the same as that of which is 1 if is a constant or a nonzero polynomial in m.

Hence in all cases where there is only one root of maximal absolute value.[18]

This assumes which is satisfied by using initial values of all zeros followed by a final 1.[15] Indeed, Cramer's rule implies that is a signed quotient of two nonsingular Vandermonde matrices, if all roots are simple; in the case of multiple roots, the dominant coefficient of is a signed quotient of two nonsingular confluent matrices.

Remove ads

Extensions

Summarize

Perspective

Bernoulli's method converges to the root of largest modulus of a polynomial with a linear order of convergence.[3] It does not converge when there are two distinct complex roots of the same largest modulus, but there are extensions of the method that work in this case.[2] For finding the root of smallest absolute value, one can apply the method on the reciprocal polynomial (polynomial obtained by reversing the order of the coefficients), and inverting the result. When using root deflation with something like Horner's method, deflating from the smallest root is more stable.[20]

To speed convergence, Alexander Aitken developed his Aitken delta-squared process as part of an improvement on his extension to Bernoulli's method, which also found all of the roots simultaneously.[19][21] Another extension of Bernoulli's method is the Quotient-Difference (QD) method, which also finds all roots simultaneously, even though it can be unstable.[3] Given the slow convergence of Bernoulli's method, and the instability of QD method, they can instead be used as reliable ways to find starting values for other root-finding algorithms, rather than iterated until tolerance.[22]

Remove ads

Example

Summarize

Perspective

The following example illustrates Bernoulli's method applied to a quadratic polynomial. Let . Then , , and , so the recurrence becomes:

Using the recommended initial values , generates the following table:

This eventually converges on , also known as the Golden ratio, which is the largest root of the example polynomial. The sequence is also the well-known Fibonacci sequence. Bernoulli's method works even if the sequence used different starting values instead of 0 and 1; the limit of the quotient remains the same.

The example also shows the absolute error approaching zero as the sequence continues. It is then possible to calculate the order of convergence using three contiguous errors. This example demonstrates that Bernoulli's method converged linearly as it approaches the dominant root of the polynomial.

Remove ads

Comparison with other methods

Summarize

Perspective

Compared to other root-finding algorithms, Bernoulli's method offers distinct advantages and limitations. The following table summarizes several important differences of Bernoulli's method in comparison with other methods:

Advantages

- No initial guess: Newton's method, Secant method, Halley's method, and other similar approaches, all require one or more starting values.[23] Bernoulli's method requires only the polynomial coefficients, eliminating the need for an initial guess.

- No derivatives: Although derivatives of polynomials are straightforward with the power rule, this is a computation that is not required in Bernoulli's method.

- Naturally finds a dominant root: Normally, finding large roots is considered less stable, but substituting z in p with , which reverses the order of coefficients, and then inverting the result of Bernoulli's method gives the smallest root of p, which is more stable.[8][20]

Limitations

- Slow convergence: Fröberg writes "As a rule, Bernoulli's method converges slowly, so instead, one ought to use, for example, the Newton-Raphson method."[24] This is in contrast to Jennings, who writes "The approximate zeros obtained by the Bernoulli method can be further improved by applying, say, the Newton-Raphson method".[4] One author argues for instead-of while the other promotes in-conjunction-with, due to the linear order of convergence. It is important to note that the method's slow convergence can be improved with Aitken's delta-squared process.[19]

- Finds one root at a time: The standard version of Bernoulli's method finds a single root, requiring deflation to find another. When compared to algorithms such as Durand–Kerner method, Aberth method, Bairstow's method, and the "RPOLY" version of Jenkins–Traub algorithm they find multiple roots by default. One can overcome this limitation by applying an extension of Bernoulli's method such as the method by Aitken or QD method.[25]

- Issues with multiples: Multiplicity and multiple dominant roots, such as conjugate pairs, can exacerbate the slowness of Bernoulli's method, yet improvements can be made to counter this.[26][27]

Remove ads

Modern applications

Summarize

Perspective

Bernoulli's method, despite its linear convergence, remains relevant in computational mathematics with finding initial values for Polynomial root-finding algorithms and extensions to more general mathematical domains.[4] It can also be used to find complex roots[2] yet the more sophisticated extensions of Bernoulli's method, such as the one by Aitken[19] and QD method,[2] are able to find complex roots while solving for all of the roots simultaneously. There are also variations on Bernoulli's method that improve stability and handle multiple roots.[26][27] A 2025 analysis of the QD method included an implementation in C.[28]

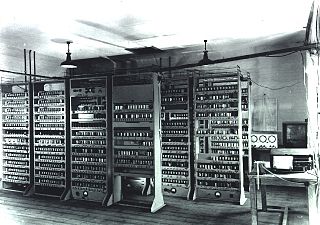

In related applications, Bernoulli's method has been shown to be equivalent to Power method on a companion matrix for finding eigenvalues.[29] Advancements in systolic arrays have led to a parallelized version of Bernoulli's method.[30] The method has also been generalized to find poles of rational functions, extending to the field of complex analysis.[31] An extension of Bernoulli's method was used for improving linear multistep methods.[32] Another development of a modified Bernoulli's method builds a supplemental function using Taylor and Laurent series expansions to then solve for roots.[33][34] An implementation of Bernoulli's method is included with the CodeCogs open source numerical methods library.[35] The method was also programmed on the EDSAC, along with Graeffe's method, but Newton's method was preferred for being faster.[36]

Remove ads

Code

Summarize

Perspective

Bernoulli's method is implemented below in the Python programming language.

def bernoulli_method(c, eps=1e-8, max_iter=60):

"""

Bernoulli's method for finding the dominant root of a polynomial.

Parameters

----------

c : list

List of polynomial coefficients in descending order of powers.

For example, if p(x) = x^2 - x - 1, c = [1.0, -1.0, -1.0]

eps : float, optional

Convergence tolerance. Default is 1e-8.

max_iter : int, optional

Maximum number of iterations. Default is 60.

Returns

-------

float or complex

The dominant root of the polynomial if found, otherwise float('nan').

Examples

--------

>>> bernoulli_method([1.0, -1.0, -1.0]) # Golden ratio example

1.6180339901755971

>>> bernoulli_method([1.0, -3.0, 2.0]) # x^2 - 3x + 2 = (x - 2)(x - 1)

2.0000000074505806

"""

n = len(c)

x = [0.0] * (n - 2) + [1.0] # Initialize with zeros and a 1.0

q = []

for i in range(n - 1, max_iter + n):

# Apply the recurrence relation: x_n = -(a_1*x_{n-1} + ... + a_d*x_{n-d})/a_0

x.append(-sum(c[k] * x[-k + i] for k in range(1, n)) / c[0])

q.append(x[-1] / x[-2]) # Quotient of two successive x terms q_n = x_{n+1} / x_n

# Check for convergence after two quotient values

if len(q) >= 2 and abs(q[-1] - q[-2]) <= eps:

return q[-1] # Return the last computed quotient

return float("nan") # No convergence within max_iter

An efficiency improvement would be to normalize the coefficients by the leading coefficient at the beginning of the method (c = [coef / c[0] for coef in c]), eliminating the need for a division operation in the recurrence relation inside the main loop body (x.append(-sum(c[k] * x[-k + i] for k in range(1, n)))). This change does not impact the convergence order of the method. Implementing higher-order convergence would require Aitken's delta-squared process.

Remove ads

See also

References

Wikiwand - on

Seamless Wikipedia browsing. On steroids.

Remove ads