Conjugate gradient method

Mathematical optimization algorithm From Wikipedia, the free encyclopedia

In mathematics, the conjugate gradient method is an algorithm for the numerical solution of particular systems of linear equations, namely those whose matrix is symmetric and positive-definite. The conjugate gradient method is often implemented as an iterative algorithm, applicable to sparse systems that are too large to be handled by a direct implementation or other direct methods such as the Cholesky decomposition. Large sparse systems often arise when numerically solving partial differential equations or optimization problems.

The conjugate gradient method can also be used to solve unconstrained optimization problems such as energy minimization. It is commonly attributed to Magnus Hestenes and Eduard Stiefel,[1][2] who programmed it on the Z4,[3] and extensively researched it.[4][5]

The biconjugate gradient method provides a generalization to non-symmetric matrices. Various nonlinear conjugate gradient methods seek minima of nonlinear optimization problems.

Description of the problem addressed by conjugate gradients

Suppose we want to solve the system of linear equations

$$Ax = b$$

for the vector $x$, where the known $n \times n$ matrix $A$ is symmetric (i.e., $A^\mathsf{T} = A$), positive-definite (i.e. $x^\mathsf{T} A x > 0$ for all non-zero vectors $x$ in $\mathbb{R}^n$), and real, and $b$ is known as well. We denote the unique solution of this system by $x_*$.

Derivation as a direct method

The conjugate gradient method can be derived from several different perspectives, including specialization of the conjugate direction method for optimization, and variation of the Arnoldi/Lanczos iteration for eigenvalue problems. Despite differences in their approaches, these derivations share a common core: proving the orthogonality of the residuals and the conjugacy of the search directions. These two properties are crucial to developing the well-known succinct formulation of the method.

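We say that two non-zero vectors $u$ and $v$ are conjugate (with respect to $A$) if

$$u^\mathsf{T} A v = 0.$$

Since $A$ is symmetric and positive-definite, the left-hand side defines an inner product

$$\langle u, v\rangle_A := \langle Au, v\rangle = \langle u, A^\mathsf{T} v\rangle = \langle u, Av\rangle = u^\mathsf{T} A v.$$

Two vectors are conjugate if and only if they are orthogonal with respect to this inner product. Being conjugate is a symmetric relation: if $u$ is conjugate to $v$, then $v$ is conjugate to $u$. Suppose that

$$P = \{p_1, \dots, p_n\}$$

is a set of $n$ mutually conjugate vectors with respect to $A$, i.e. $p_i^\mathsf{T} A p_j = 0$ for all $i \neq j$. Since mutually conjugate non-zero vectors are linearly independent, $P$ forms a basis for $\mathbb{R}^n$, and we may express the solution $x_*$ of $Ax = b$ in this basis:

$$x_* = \sum_{i=1}^{n} \alpha_i p_i.$$

Left-multiplying the problem $Ax_* = b$ with the vector $p_k^\mathsf{T}$ yields

$$p_k^\mathsf{T} b = p_k^\mathsf{T} A x_* = \sum_{i=1}^{n} \alpha_i\, p_k^\mathsf{T} A p_i = \alpha_k\, p_k^\mathsf{T} A p_k,$$

and so

$$\alpha_k = \frac{\langle p_k, b\rangle}{\langle p_k, p_k\rangle_A}.$$

This gives the following method[4] for solving the equation $Ax = b$: find a sequence of $n$ conjugate directions, and then compute the coefficients $\alpha_k$.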
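The direct method can be sketched in plain Python as follows. This is only an illustration under our own assumptions: the matrix is a small dense list of rows, the conjugate directions are obtained by Gram–Schmidt on the standard basis vectors in the $A$-inner product (one possible choice among many), and the helper names `dot`, `matvec`, `conjugate_directions` and `solve_direct` are hypothetical.

```python
def dot(u, v):
    """Euclidean inner product u^T v."""
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, v):
    """Product A v for a dense matrix stored as a list of rows."""
    return [dot(row, v) for row in A]


def conjugate_directions(A):
    """A-orthogonalize the standard basis e_1, ..., e_n (Gram-Schmidt in <u, v>_A)."""
    n = len(A)
    directions = []
    for j in range(n):
        e = [1.0 if i == j else 0.0 for i in range(n)]
        p = e[:]
        for q in directions:
            Aq = matvec(A, q)
            coef = dot(e, Aq) / dot(q, Aq)  # <e, q>_A / <q, q>_A
            p = [pi - coef * qi for pi, qi in zip(p, q)]
        directions.append(p)
    return directions


def solve_direct(A, b):
    """x_* = sum_k alpha_k p_k with alpha_k = <p_k, b> / <p_k, p_k>_A."""
    x = [0.0] * len(b)
    for p in conjugate_directions(A):
        alpha = dot(p, b) / dot(p, matvec(A, p))
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
    return x


# Example: a small symmetric positive-definite system (our own choice).
A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]
x = solve_direct(A, b)
```

All $n$ directions are built before any coefficient is computed, which is what makes this a direct rather than an iterative method.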

As an iterative method

If we choose the conjugate vectors $p_k$ carefully, then we may not need all of them to obtain a good approximation to the solution $x_*$. So, we want to regard the conjugate gradient method as an iterative method. This also allows us to approximately solve systems where $n$ is so large that the direct method would take too much time.

We denote the initial guess for $x_*$ by $x_0$ (we can assume without loss of generality that $x_0 = 0$, otherwise consider the system $Az = b - Ax_0$ instead). Starting with $x_0$ we search for the solution and in each iteration we need a metric to tell us whether we are closer to the solution $x_*$ (that is unknown to us). This metric comes from the fact that the solution $x_*$ is also the unique minimizer of the following quadratic function

$$f(x) = \tfrac{1}{2} x^\mathsf{T} A x - x^\mathsf{T} b, \qquad x \in \mathbb{R}^n.$$

The existence of a unique minimizer is apparent as its Hessian matrix of second derivatives is symmetric positive-definite

$$H(f(x)) = A,$$

and that the minimizer (use $Df(x) = 0$) solves the initial problem follows from its first derivative

$$\nabla f(x) = Ax - b.$$

This suggests taking the first basis vector $p_0$ to be the negative of the gradient of $f$ at $x = x_0$. The gradient of $f$ equals $Ax - b$. Starting with an initial guess $x_0$, this means we take $p_0 = b - Ax_0$. The other vectors in the basis will be conjugate to the gradient, hence the name conjugate gradient method. Note that $p_0$ is also the residual provided by this initial step of the algorithm.

Let be the residual at the th step:

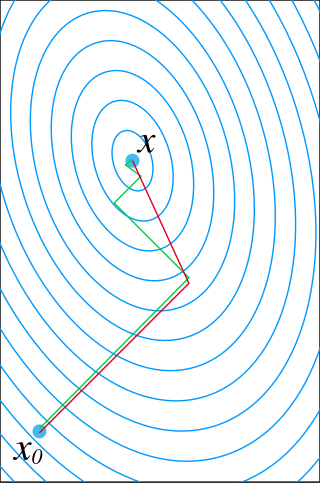

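As observed above, $r_k$ is the negative gradient of $f$ at $x_k$, so the gradient descent method would move in the direction $r_k$. Here, however, we insist that the directions $p_k$ must be conjugate to each other. A practical way to enforce this is by requiring that the next search direction be built out of the current residual and all previous search directions. The conjugation constraint is an orthonormal-type constraint and hence the algorithm can be viewed as an example of Gram-Schmidt orthonormalization. This gives the following expression:

$$p_k = r_k - \sum_{i < k} \frac{r_k^\mathsf{T} A p_i}{p_i^\mathsf{T} A p_i}\, p_i$$

(see the picture at the top of the article for the effect of the conjugacy constraint on convergence). Following this direction, the next optimal location is given by

$$x_{k+1} = x_k + \alpha_k p_k$$

with

$$\alpha_k = \frac{p_k^\mathsf{T}(b - Ax_k)}{p_k^\mathsf{T} A p_k} = \frac{p_k^\mathsf{T} r_k}{p_k^\mathsf{T} A p_k},$$

where the last equality follows from the definition of $r_k$. The expression for $\alpha_k$ can be derived by substituting the expression for $x_{k+1}$ into $f$ and minimizing it with respect to $\alpha_k$:

$$g(\alpha_k) := f(x_k + \alpha_k p_k) = f(x_k) - \alpha_k\, p_k^\mathsf{T} r_k + \tfrac{1}{2}\alpha_k^2\, p_k^\mathsf{T} A p_k, \qquad g'(\alpha_k) = 0 \;\Longrightarrow\; \alpha_k = \frac{p_k^\mathsf{T} r_k}{p_k^\mathsf{T} A p_k}.$$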
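To see why storing every direction is costly, the following plain-Python sketch implements the procedure exactly as derived so far: each residual is recomputed explicitly and then $A$-orthogonalized against all previously stored directions before the line search. It is a hypothetical illustration (dense list-of-rows matrices, helper names of our own choosing), not an efficient implementation.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, v):
    return [dot(row, v) for row in A]


def cg_full_gram_schmidt(A, b, tol=1e-12):
    """CG exactly as derived: each residual is A-orthogonalized against ALL stored directions."""
    n = len(b)
    x = [0.0] * n
    directions = []                                           # every p_i is kept
    for _ in range(n):                                        # at most n steps in exact arithmetic
        r = [bi - Axi for bi, Axi in zip(b, matvec(A, x))]    # r_k = b - A x_k
        if dot(r, r) ** 0.5 <= tol:
            break
        p = r[:]
        for q in directions:                                  # p_k = r_k - sum_i (r_k^T A p_i / p_i^T A p_i) p_i
            Aq = matvec(A, q)
            coef = dot(r, Aq) / dot(q, Aq)
            p = [pi - coef * qi for pi, qi in zip(p, q)]
        Ap = matvec(A, p)
        alpha = dot(p, r) / dot(p, Ap)                        # alpha_k = p_k^T r_k / p_k^T A p_k
        x = [xi + alpha * pi for xi, pi in zip(x, p)]         # x_{k+1} = x_k + alpha_k p_k
        directions.append(p)
    return x, directions


A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]
x, directions = cg_full_gram_schmidt(A, b)
```

Step $k$ here costs $k + 2$ products with $A$ and keeps $k$ vectors, which is the expense the short recurrences of the resulting algorithm avoid.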

The resulting algorithm

The above algorithm gives the most straightforward explanation of the conjugate gradient method. Seemingly, the algorithm as stated requires storage of all previous search directions and residual vectors, as well as many matrix–vector multiplications, and thus can be computationally expensive. However, a closer analysis of the algorithm shows that $r_i$ is orthogonal to $r_j$, i.e. $r_i^\mathsf{T} r_j = 0$, for $i \neq j$. And $p_i$ is $A$-orthogonal to $p_j$, i.e. $p_i^\mathsf{T} A p_j = 0$, for $i \neq j$. This can be interpreted as follows: as the algorithm progresses, the $p_i$ and the $r_i$ span the same Krylov subspace, where the $r_i$ form an orthogonal basis with respect to the standard inner product, and the $p_i$ form an orthogonal basis with respect to the inner product induced by $A$. Therefore, $x_k$ can be regarded as the projection of $x_*$ onto the Krylov subspace with respect to the $A$-inner product.

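That is, if the CG method starts with $x_0 = 0$, then[6]

$$x_k = \arg\min_{y \in \operatorname{span}\{b, Ab, \ldots, A^{k-1} b\}} (x_* - y)^\mathsf{T} A (x_* - y).$$

The algorithm is detailed below for solving $Ax = b$ where $A$ is a real, symmetric, positive-definite matrix. The input vector $x_0$ can be an approximate initial solution or $0$. It is a different formulation of the exact procedure described above.

$$\begin{aligned}
& r_0 := b - Ax_0 \\
& \text{if } r_0 \text{ is sufficiently small, then return } x_0 \text{ as the result} \\
& p_0 := r_0 \\
& k := 0 \\
& \text{repeat} \\
& \qquad \alpha_k := \frac{r_k^\mathsf{T} r_k}{p_k^\mathsf{T} A p_k} \\
& \qquad x_{k+1} := x_k + \alpha_k p_k \\
& \qquad r_{k+1} := r_k - \alpha_k A p_k \\
& \qquad \text{if } r_{k+1} \text{ is sufficiently small, then exit loop} \\
& \qquad \beta_k := \frac{r_{k+1}^\mathsf{T} r_{k+1}}{r_k^\mathsf{T} r_k} \\
& \qquad p_{k+1} := r_{k+1} + \beta_k p_k \\
& \qquad k := k + 1 \\
& \text{end repeat} \\
& \text{return } x_{k+1} \text{ as the result}
\end{aligned}$$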

This is the most commonly used algorithm. The same formula for $\beta_k$ is also used in the Fletcher–Reeves nonlinear conjugate gradient method.
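A minimal plain-Python sketch of this algorithm, assuming a dense matrix stored as a list of rows. The test $\sqrt{r_k^\mathsf{T} r_k} \le$ `tol` stands in for "sufficiently small", and the iteration cap `max_iter` is our own safeguard rather than part of the algorithm; the helper names are hypothetical.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, v):
    return [dot(row, v) for row in A]


def conjugate_gradient(A, b, x0=None, tol=1e-10, max_iter=None):
    """Plain CG for a real symmetric positive-definite A (dense list of rows)."""
    n = len(b)
    x = [0.0] * n if x0 is None else list(x0)
    r = [bi - Axi for bi, Axi in zip(b, matvec(A, x))]  # r_0 = b - A x_0
    p = r[:]                                            # p_0 = r_0
    rs_old = dot(r, r)                                  # r_k^T r_k
    if max_iter is None:
        max_iter = 10 * n  # generous cap; exact arithmetic needs at most n steps
    for _ in range(max_iter):
        if rs_old ** 0.5 <= tol:                        # "sufficiently small" test
            break
        Ap = matvec(A, p)                               # the only product with A per step
        alpha = rs_old / dot(p, Ap)                     # alpha_k
        x = [xi + alpha * pi for xi, pi in zip(x, p)]   # x_{k+1}
        r = [ri - alpha * qi for ri, qi in zip(r, Ap)]  # r_{k+1} by recursion
        rs_new = dot(r, r)
        beta = rs_new / rs_old                          # beta_k
        p = [ri + beta * pi for ri, pi in zip(r, p)]    # p_{k+1}
        rs_old = rs_new
    return x


A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]
x = conjugate_gradient(A, b)
```

Only the current $x$, $r$, $p$ and $Ap$ are kept, so memory stays at a few vectors regardless of the number of iterations.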

Restarts

We note that $x_1$ is computed by the gradient descent method applied to $x_0$. Setting $\beta_k = 0$ would similarly make $x_{k+1}$ computed by the gradient descent method from $x_k$, i.e., setting $\beta_k = 0$ can be used as a simple implementation of a restart of the conjugate gradient iterations.[4] Restarts could slow down convergence, but may improve stability if the conjugate gradient method misbehaves, e.g., due to round-off error.
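The restart idea can be sketched by forcing $\beta_k = 0$ every few steps. In the hypothetical helper `cg_with_restarts` below (plain Python, dense list-of-rows matrix), `restart_every=1` turns every step into a steepest-descent step with exact line search, while a very large value effectively recovers plain conjugate gradients.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, v):
    return [dot(row, v) for row in A]


def cg_with_restarts(A, b, x0, restart_every, tol=1e-10, max_iter=1000):
    """CG that sets beta_k = 0 every `restart_every` steps (a restart).

    restart_every=1 gives steepest descent with exact line search."""
    x = list(x0)
    r = [bi - Axi for bi, Axi in zip(b, matvec(A, x))]
    p = r[:]
    rs_old = dot(r, r)
    k = 0
    while rs_old ** 0.5 > tol and k < max_iter:
        Ap = matvec(A, p)
        alpha = rs_old / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, Ap)]
        rs_new = dot(r, r)
        k += 1
        # Restart: forget the previous directions and continue from the residual alone.
        beta = 0.0 if k % restart_every == 0 else rs_new / rs_old
        p = [ri + beta * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x, k


A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]
x0 = [0.0, 0.0, 0.0]
x_restart, k_restart = cg_with_restarts(A, b, x0, restart_every=2)
x_sd, k_sd = cg_with_restarts(A, b, x0, restart_every=1)       # steepest descent
x_cg, k_cg = cg_with_restarts(A, b, x0, restart_every=10**9)   # effectively never restarts
```

Comparing `k_sd` with `k_cg` on a small SPD matrix like this one illustrates how restarting at every step slows convergence.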

Explicit residual calculation

The formulas $x_{k+1} := x_k + \alpha_k p_k$ and $r_k := b - Ax_k$, which both hold in exact arithmetic, make the formulas $r_{k+1} := r_k - \alpha_k A p_k$ and $r_{k+1} := b - Ax_{k+1}$ mathematically equivalent. The former is used in the algorithm to avoid an extra multiplication by $A$, since the vector $Ap_k$ is already computed to evaluate $\alpha_k$. The latter may be more accurate, substituting the explicit calculation $r_{k+1} := b - Ax_{k+1}$ for the implicit one by the recursion subject to round-off error accumulation, and is thus recommended for an occasional evaluation.[7]

A norm of the residual is typically used for stopping criteria. The norm of the explicit residual provides a guaranteed level of accuracy both in exact arithmetic and in the presence of rounding errors, where convergence naturally stagnates. In contrast, the implicit residual is known to keep getting smaller in amplitude well below the level of rounding errors and thus cannot be used to determine the stagnation of convergence.
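The difference between the two residuals can be observed with a small experiment. The sketch below (plain Python; the helper name and test matrix are our own) runs a fixed number of CG steps without any stopping test and reports both the norm of the recursively updated residual and the norm of the explicitly recomputed $b - Ax_k$. After the iterates have converged, one would typically expect the first to keep shrinking while the second levels off near rounding-error size.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, v):
    return [dot(row, v) for row in A]


def recursive_vs_explicit_residual(A, b, iters):
    """Run `iters` CG steps from x_0 = 0 without a stopping test.

    Returns (norm of the recursively updated residual, norm of b - A x)."""
    x = [0.0] * len(b)
    r = list(b)
    p = r[:]
    rs_old = dot(r, r)
    for _ in range(iters):
        Ap = matvec(A, p)
        pAp = dot(p, Ap)
        if rs_old == 0.0 or pAp == 0.0:   # exact breakdown: nothing left to update
            break
        alpha = rs_old / pAp
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, Ap)]         # implicit (recursive) residual
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    explicit = [bi - Axi for bi, Axi in zip(b, matvec(A, x))]  # explicit residual
    return rs_old ** 0.5, dot(explicit, explicit) ** 0.5


A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]
recursive_norm, explicit_norm = recursive_vs_explicit_residual(A, b, 10)
```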

Computation of alpha and beta

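In the algorithm, $\alpha_k$ is chosen such that $r_{k+1}$ is orthogonal to $r_k$. The denominator is simplified from

$$\alpha_k = \frac{r_k^\mathsf{T} r_k}{r_k^\mathsf{T} A p_k} = \frac{r_k^\mathsf{T} r_k}{p_k^\mathsf{T} A p_k}$$

since $r_k = p_k - \beta_{k-1} p_{k-1}$ and $p_{k-1}$ is conjugate to $p_k$. The $\beta_k$ is chosen such that $p_{k+1}$ is conjugate to $p_k$. Before simplification, $\beta_k$ is

$$\beta_k = -\frac{r_{k+1}^\mathsf{T} A p_k}{p_k^\mathsf{T} A p_k}.$$

Using

$$r_{k+1} = r_k - \alpha_k A p_k$$

and equivalently

$$A p_k = \frac{1}{\alpha_k}(r_k - r_{k+1}),$$

the numerator of $\beta_k$ is rewritten as

$$r_{k+1}^\mathsf{T} A p_k = \frac{1}{\alpha_k} r_{k+1}^\mathsf{T}(r_k - r_{k+1}) = -\frac{1}{\alpha_k} r_{k+1}^\mathsf{T} r_{k+1}$$

because $r_{k+1}$ and $r_k$ are orthogonal by design. The denominator is rewritten as

$$p_k^\mathsf{T} A p_k = (r_k + \beta_{k-1} p_{k-1})^\mathsf{T} A p_k = \frac{1}{\alpha_k} r_k^\mathsf{T}(r_k - r_{k+1}) = \frac{1}{\alpha_k} r_k^\mathsf{T} r_k$$

using that the search directions are conjugate and again that the residuals are orthogonal. This gives the $\beta_k$ in the algorithm after cancelling $\alpha_k$.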
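The equivalences derived above can be checked numerically. This plain-Python sketch (hypothetical helper name, dense list-of-rows matrix, $x_0 = 0$) evaluates both the unsimplified and the simplified expressions for $\alpha_k$ and $\beta_k$ along a short CG run; in floating point they should agree up to rounding.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, v):
    return [dot(row, v) for row in A]


def alpha_beta_forms(A, b, steps):
    """Return (alpha, alpha_unsimplified, beta, beta_unsimplified) for each CG step from x_0 = 0."""
    r = list(b)
    p = r[:]
    rows = []
    for _ in range(steps):
        Ap = matvec(A, p)
        alpha = dot(r, r) / dot(p, Ap)                    # simplified form used in the algorithm
        alpha_unsimplified = dot(r, r) / dot(r, Ap)       # from requiring r_{k+1} orthogonal to r_k
        r_new = [ri - alpha * qi for ri, qi in zip(r, Ap)]
        beta = dot(r_new, r_new) / dot(r, r)              # simplified form used in the algorithm
        beta_unsimplified = -dot(r_new, Ap) / dot(p, Ap)  # from requiring p_{k+1} conjugate to p_k
        rows.append((alpha, alpha_unsimplified, beta, beta_unsimplified))
        p = [ri + beta * pi for ri, pi in zip(r_new, p)]
        r = r_new
    return rows


A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]
rows = alpha_beta_forms(A, b, 2)
```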

Example code in Julia

```julia
using LinearAlgebra

"""
    x = conjugate_gradient(A, b, x0 = zero(b); atol=length(b)*eps(norm(b)))

Return the solution to `A * x = b` using the conjugate gradient method.
`A` must be a symmetric positive definite matrix or other linear operator.
`x0` is the initial guess for the solution (default is the zero vector).
`atol` is the absolute tolerance on the magnitude of the residual `b - A * x`
for convergence (default is `length(b)` times the floating-point spacing `eps(norm(b))`).

Returns the approximate solution vector `x`.
"""
function conjugate_gradient(
    A, b::AbstractVector, x0::AbstractVector = zero(b); atol=length(b)*eps(norm(b))
)
    x = copy(x0)          # initialize the solution
    r = b - A * x0        # initial residual
    p = copy(r)           # initial search direction
    r²old = r' * r        # squared norm of residual
    k = 0
    while r²old > atol^2  # iterate until convergence
        Ap = A * p             # matrix-vector product with the search direction
        α = r²old / (p' * Ap)  # step size
        @. x += α * p          # update solution
        # Update residual:
        if (k + 1) % 16 == 0   # every 16 iterations, recompute residual from scratch
            r .= b .- A * x    # to avoid accumulation of numerical errors
        else
            @. r -= α * Ap     # use the updating formula that saves one matrix-vector product
        end
        r²new = r' * r
        @. p = r + (r²new / r²old) * p  # update search direction
        r²old = r²new                   # update squared residual norm
        k += 1
    end
    return x
end
```

Example code in MATLAB

```matlab
function x = conjugate_gradient(A, b, x0, tol)
% Return the solution to `A * x = b` using the conjugate gradient method.
% Reminder: A should be symmetric and positive definite.
    if nargin < 4
        tol = eps;
    end
    r = b - A * x0;
    p = r;
    rsold = r' * r;
    x = x0;
    while sqrt(rsold) > tol
        Ap = A * p;
        alpha = rsold / (p' * Ap);
        x = x + alpha * p;
        r = r - alpha * Ap;
        rsnew = r' * r;
        p = r + (rsnew / rsold) * p;
        rsold = rsnew;
    end
end
```

Numerical example

Consider the linear system Ax = b given by

we will perform two steps of the conjugate gradient method beginning with the initial guess

in order to find an approximate solution to the system.

Solution

For reference, the exact solution is

Our first step is to calculate the residual vector r0 associated with x0. This residual is computed from the formula r0 = b - Ax0, and in our case is equal to

Since this is the first iteration, we will use the residual vector r0 as our initial search direction p0; the method of selecting pk will change in further iterations.

We now compute the scalar α0 using the relationship

$$\alpha_0 = \frac{r_0^\mathsf{T} r_0}{p_0^\mathsf{T} A p_0}.$$

We can now compute x1 using the formula

$$x_1 = x_0 + \alpha_0 p_0.$$

This result completes the first iteration, the result being an "improved" approximate solution to the system, x1. We may now move on and compute the next residual vector r1 using the formula

$$r_1 = r_0 - \alpha_0 A p_0.$$

Our next step in the process is to compute the scalar β0 that will eventually be used to determine the next search direction p1:

$$\beta_0 = \frac{r_1^\mathsf{T} r_1}{r_0^\mathsf{T} r_0}.$$

Now, using this scalar β0, we can compute the next search direction p1 using the relationship

$$p_1 = r_1 + \beta_0 p_0.$$

We now compute the scalar α1 using our newly acquired p1 using the same method as that used for α0:

$$\alpha_1 = \frac{r_1^\mathsf{T} r_1}{p_1^\mathsf{T} A p_1}.$$

Finally, we find x2 using the same method as that used to find x1:

$$x_2 = x_1 + \alpha_1 p_1.$$

The result, x2, is a "better" approximation to the system's solution than x1 and x0. If exact arithmetic were to be used in this example instead of limited-precision, then the exact solution would theoretically have been reached after n = 2 iterations (n being the order of the system).
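The same two steps can be reproduced in plain Python. The system used here, $A = \begin{bmatrix}4&1\\1&3\end{bmatrix}$, $b = [1, 2]^\mathsf{T}$, $x_0 = [2, 1]^\mathsf{T}$, is a small SPD system chosen for this sketch (an assumption of ours, not necessarily the one in the example above), and the helper `cg_steps` is hypothetical.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, v):
    return [dot(row, v) for row in A]


def cg_steps(A, b, x0, steps):
    """Run `steps` CG iterations and record alpha_k, beta_k and x_{k+1} at each one."""
    x = list(x0)
    r = [bi - Axi for bi, Axi in zip(b, matvec(A, x))]   # r_0 = b - A x_0
    p = r[:]                                             # p_0 = r_0
    history = []
    for _ in range(steps):
        Ap = matvec(A, p)
        rr = dot(r, r)
        alpha = rr / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r_new = [ri - alpha * qi for ri, qi in zip(r, Ap)]
        beta = dot(r_new, r_new) / rr
        history.append({"alpha": alpha, "beta": beta, "x": x})
        p = [ri + beta * pi for ri, pi in zip(r_new, p)]
        r = r_new
    return history


A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]
history = cg_steps(A, b, [2.0, 1.0], 2)
```

For this system $r_0 = [-8, -3]^\mathsf{T}$ and $\alpha_0 = 73/331$; the exact solution is $[1/11, 7/11]^\mathsf{T}$, and `history[1]["x"]` agrees with it up to rounding, as the finite termination property predicts for $n = 2$.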

Finite Termination Property

Under exact arithmetic, the number of iterations required is no more than the order of the matrix. This behavior is known as the finite termination property of the conjugate gradient method. It refers to the method's ability to reach the exact solution of a linear system in a finite number of steps (at most equal to the dimension of the system) when exact arithmetic is used. This property arises from the fact that, at each iteration, the method generates a residual vector that is orthogonal to all previous residuals. These residuals form a mutually orthogonal set.

In an n-dimensional space, at most n non-zero vectors can be mutually orthogonal, because mutually orthogonal non-zero vectors are linearly independent. Therefore the residuals r0, ..., rn cannot all be non-zero: a zero residual must appear within at most n iterations, at which point the method has reached the solution and terminates. This ensures that the conjugate gradient method converges in at most n steps.
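The orthogonality argument can be checked on a small case. The sketch below is plain Python with a hypothetical helper name; the $4 \times 4$ tridiagonal test matrix and the right-hand side are our own choice. It collects the residuals $r_0, \dots, r_4$ so that one can confirm they are mutually orthogonal up to rounding and that $r_4$ is essentially zero.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, v):
    return [dot(row, v) for row in A]


def cg_residuals(A, b, steps):
    """Return the residuals r_0, ..., r_steps produced by CG from x_0 = 0."""
    r = list(b)
    p = r[:]
    residuals = [r]
    for _ in range(steps):
        rr = dot(r, r)
        if rr == 0.0:                                  # already exact: stop early
            break
        Ap = matvec(A, p)
        alpha = rr / dot(p, Ap)
        r_new = [ri - alpha * qi for ri, qi in zip(r, Ap)]
        beta = dot(r_new, r_new) / rr
        p = [ri + beta * pi for ri, pi in zip(r_new, p)]
        r = r_new
        residuals.append(r)
    return residuals


A = [[2.0, -1.0, 0.0, 0.0],
     [-1.0, 2.0, -1.0, 0.0],
     [0.0, -1.0, 2.0, -1.0],
     [0.0, 0.0, -1.0, 2.0]]
b = [1.0, 2.0, 3.0, 4.0]
residuals = cg_residuals(A, b, 4)
```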

To demonstrate this, consider the system:

$$\begin{bmatrix} 3 & -2 \\ -2 & 4 \end{bmatrix} x = \begin{bmatrix} 1 \\ 2 \end{bmatrix}.$$

We start from an initial guess $x_0 = \begin{bmatrix} 1 \\ 2 \end{bmatrix}$. Since $A$ is symmetric positive-definite and the system is 2-dimensional, the conjugate gradient method should find the exact solution in no more than 2 steps. The following MATLAB code demonstrates this behavior:

```matlab
A = [3, -2; -2, 4];
x_true = [1; 1];
b = A * x_true;
x = [1; 2];   % initial guess
r = b - A * x;
p = r;
for k = 1:2
    Ap = A * p;
    alpha = (r' * r) / (p' * Ap);
    x = x + alpha * p;
    r_new = r - alpha * Ap;
    beta = (r_new' * r_new) / (r' * r);
    p = r_new + beta * p;
    r = r_new;
end
disp('Solution after two iterations:');
disp(x);
```

The output confirms that the method reaches $x = \begin{bmatrix} 1 \\ 1 \end{bmatrix}$ (up to rounding error) after two iterations, consistent with the theoretical prediction. This example illustrates how the conjugate gradient method behaves as a direct method under idealized conditions.

Application to Sparse Systems

The finite termination property also has practical implications in solving large sparse systems, which frequently arise in scientific and engineering applications. For instance, discretizing the two-dimensional Laplace equation using finite differences on a uniform grid leads to a sparse linear system $Ax = b$, where $A$ is symmetric and positive definite.

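Using a $5 \times 5$ interior grid yields a $25 \times 25$ system, and the coefficient matrix $A$ has a five-point stencil pattern. Each row of $A$ contains at most five nonzero entries corresponding to the central point and its immediate neighbors. For example, the matrix generated from such a grid may look like:

$$A = \begin{bmatrix} T & -I & & & \\ -I & T & -I & & \\ & -I & T & -I & \\ & & -I & T & -I \\ & & & -I & T \end{bmatrix}, \qquad T = \begin{bmatrix} 4 & -1 & & & \\ -1 & 4 & -1 & & \\ & -1 & 4 & -1 & \\ & & -1 & 4 & -1 \\ & & & -1 & 4 \end{bmatrix},$$

where $I$ is the $5 \times 5$ identity matrix.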

Because the system dimension is 25, the conjugate gradient method is theoretically guaranteed to terminate in at most 25 iterations under exact arithmetic. In practice, convergence often occurs in far fewer steps due to the matrix's spectral properties. This efficiency makes the conjugate gradient method particularly attractive for solving large-scale systems arising from partial differential equations, such as those found in heat conduction, fluid dynamics, and electrostatics.
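A matrix-free variant fits this setting well, since the method only needs products with $A$. The plain-Python sketch below applies the five-point stencil directly on an $m \times m$ interior grid with zero boundary values instead of storing the $25 \times 25$ matrix; the omission of the $1/h^2$ grid-spacing factor, the all-ones right-hand side and the helper names are our own assumptions.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def laplacian_5pt(u, m):
    """Apply the five-point stencil 4*u[i,j] - (four neighbours) on an m-by-m interior grid.

    u is stored row by row in a flat list of length m*m; values outside the grid are
    taken as zero (homogeneous Dirichlet boundary), and the 1/h^2 factor is omitted."""
    out = [0.0] * (m * m)
    for i in range(m):
        for j in range(m):
            k = i * m + j
            s = 4.0 * u[k]
            if i > 0:
                s -= u[k - m]      # neighbour above
            if i < m - 1:
                s -= u[k + m]      # neighbour below
            if j > 0:
                s -= u[k - 1]      # neighbour to the left
            if j < m - 1:
                s -= u[k + 1]      # neighbour to the right
            out[k] = s
    return out


def cg_matrix_free(apply_A, b, tol=1e-10, max_iter=1000):
    """CG that only needs a function v -> A v; stops when ||r|| <= tol * ||b||."""
    x = [0.0] * len(b)
    r = list(b)
    p = r[:]
    rs_old = dot(r, r)
    stop = tol * dot(b, b) ** 0.5
    k = 0
    while rs_old ** 0.5 > stop and k < max_iter:
        Ap = apply_A(p)
        alpha = rs_old / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, Ap)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
        k += 1
    return x, k


m = 5                      # 5 x 5 interior grid -> 25 unknowns
b = [1.0] * (m * m)        # right-hand side chosen for illustration
x, iterations = cg_matrix_free(lambda v: laplacian_5pt(v, m), b)
```

Inspecting `iterations` shows how many steps were actually needed, which one would expect to be well below the theoretical limit of 25.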

Convergence properties

The conjugate gradient method can theoretically be viewed as a direct method, as in the absence of round-off error it produces the exact solution after a finite number of iterations, which is not larger than the size of the matrix. In practice, the exact solution is never obtained since the conjugate gradient method is unstable with respect to even small perturbations, e.g., most directions are not in practice conjugate, due to a degenerative nature of generating the Krylov subspaces.

As an iterative method, the conjugate gradient method monotonically (in the energy norm) improves approximations to the exact solution and may reach the required tolerance after a relatively small (compared to the problem size) number of iterations. The improvement is typically linear and its speed is determined by the condition number $\kappa(A)$ of the system matrix $A$: the larger $\kappa(A)$ is, the slower the improvement.[8]

However, an interesting case appears when the eigenvalues are spaced logarithmically for a large symmetric matrix. For example, let $A = QDQ^\mathsf{T}$, where $Q$ is a random orthogonal matrix and $D$ is a diagonal matrix whose eigenvalues are spaced logarithmically over a wide range. Despite the finite termination property of the conjugate gradient method, where the exact solution should theoretically be reached in at most $n$ steps, the method may exhibit stagnation in convergence. In such a scenario, even after many more iterations (e.g., ten times the matrix size), the error may only decrease modestly. Moreover, the iterative error may oscillate significantly, making it unreliable as a stopping condition. This poor convergence is not explained by the condition number $\kappa(A)$ alone, but rather by the eigenvalue distribution itself. When the eigenvalues are more evenly spaced or randomly distributed, such convergence issues are typically absent, highlighting that the performance of the conjugate gradient method depends not only on $\kappa(A)$ but also on how the eigenvalues are distributed.[9]

If $\kappa(A)$ is large, preconditioning is commonly used to replace the original system $Ax - b = 0$ with $M^{-1}(Ax - b) = 0$ such that $\kappa(M^{-1}A)$ is smaller than $\kappa(A)$, see below.

Convergence theorem

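Define a subset of polynomials as

$$\Pi_k^* := \{\, p \in \Pi_k : p(0) = 1 \,\},$$

where $\Pi_k$ is the set of polynomials of maximal degree $k$.

Let $(x_k)_k$ be the iterative approximations of the exact solution $x_*$, and define the errors as $e_k := x_k - x_*$. Now, the rate of convergence can be bounded as[4][10]

$$\begin{aligned}
\|e_k\|_A &= \min_{p \in \Pi_k^*} \|p(A) e_0\|_A \\
&\le \min_{p \in \Pi_k^*} \max_{\lambda \in \sigma(A)} |p(\lambda)|\, \|e_0\|_A \\
&\le 2 \left(\frac{\sqrt{\kappa(A)} - 1}{\sqrt{\kappa(A)} + 1}\right)^k \|e_0\|_A \\
&\le 2 \exp\left(\frac{-2k}{\sqrt{\kappa(A)}}\right) \|e_0\|_A,
\end{aligned}$$

where $\sigma(A)$ denotes the spectrum, and $\kappa(A)$ denotes the condition number.

This shows that $k = \tfrac{1}{2}\sqrt{\kappa(A)}\, \ln\left(\|e_0\|_A\, \varepsilon^{-1}\right)$ iterations suffice to reduce the error to $2\varepsilon$ for any $\varepsilon > 0$.

Note the important limit when $\kappa(A)$ tends to $\infty$:

$$\frac{\sqrt{\kappa(A)} - 1}{\sqrt{\kappa(A)} + 1} \approx 1 - \frac{2}{\sqrt{\kappa(A)}} \quad \text{for } \kappa(A) \gg 1.$$

This limit shows a faster convergence rate compared to the iterative methods of Jacobi or Gauss–Seidel, which scale as $\approx 1 - \frac{2}{\kappa(A)}$.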
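The bound can be compared with an actual run. The following plain-Python sketch is an illustration under our own assumptions: a diagonal test matrix with eigenvalues $1, 2, \dots, 100$ (so $\kappa(A) = 100$), $b$ all ones and $x_0 = 0$; the helper names are hypothetical. It records $\|e_k\|_A$ for comparison with $2\left(\frac{\sqrt{\kappa}-1}{\sqrt{\kappa}+1}\right)^k \|e_0\|_A$, and also evaluates the iteration estimate $k = \frac12\sqrt{\kappa}\,\ln(\|e_0\|_A/\varepsilon)$.

```python
import math


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def cg_error_history(d, b, iters):
    """Run CG on A = diag(d) from x_0 = 0 and return [||e_0||_A, ..., ||e_iters||_A]."""
    x_star = [bi / di for bi, di in zip(b, d)]       # exact solution of the diagonal system

    def a_norm_error(x):
        return math.sqrt(sum(di * (xi - si) ** 2 for di, xi, si in zip(d, x, x_star)))

    x = [0.0] * len(b)
    r = list(b)
    p = r[:]
    rs_old = dot(r, r)
    history = [a_norm_error(x)]
    for _ in range(iters):
        Ap = [di * pi for di, pi in zip(d, p)]       # diagonal matrix-vector product
        alpha = rs_old / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, Ap)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
        history.append(a_norm_error(x))
    return history


def cg_bound(kappa, k, e0):
    """2 * ((sqrt(kappa) - 1) / (sqrt(kappa) + 1))**k * ||e_0||_A."""
    q = (math.sqrt(kappa) - 1.0) / (math.sqrt(kappa) + 1.0)
    return 2.0 * q ** k * e0


def iterations_for(kappa, e0, eps):
    """Iteration estimate k = (1/2) sqrt(kappa) ln(||e_0||_A / eps), rounded up."""
    return math.ceil(0.5 * math.sqrt(kappa) * math.log(e0 / eps))


d = [float(i) for i in range(1, 101)]   # eigenvalues 1, 2, ..., 100, so kappa(A) = 100
b = [1.0] * len(d)
history = cg_error_history(d, b, 20)
```

For $\kappa(A) = 100$, $\|e_0\|_A = 1$ and $\varepsilon = 10^{-6}$, the estimate gives $\lceil 5 \ln 10^6 \rceil = 70$ iterations.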

No round-off error is assumed in the convergence theorem, but the convergence bound is commonly valid in practice as theoretically explained[5] by Anne Greenbaum.

Practical convergence

If initialized randomly, the first stage of iterations is often the fastest, as the error is eliminated within the Krylov subspace that initially reflects a smaller effective condition number. The second stage of convergence is typically well defined by the theoretical convergence bound with $\sqrt{\kappa(A)}$, but may be super-linear, depending on a distribution of the spectrum of the matrix $A$ and the spectral distribution of the error.[5] In the last stage, the smallest attainable accuracy is reached and the convergence stalls or the method may even start diverging. In typical scientific computing applications in double-precision floating-point format for matrices of large sizes, the conjugate gradient method uses a stopping criterion with a tolerance that terminates the iterations during the first or second stage.

The preconditioned conjugate gradient method

In most cases, preconditioning is necessary to ensure fast convergence of the conjugate gradient method. If $M^{-1}$ is symmetric positive-definite and $M^{-1}A$ has a better condition number than $A$, a preconditioned conjugate gradient method can be used. It takes the following form:[11]

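- $r_0 := b - Ax_0$
- $z_0 := M^{-1} r_0$
- $p_0 := z_0$
- $k := 0$
- repeat
  - $\alpha_k := \dfrac{r_k^\mathsf{T} z_k}{p_k^\mathsf{T} A p_k}$
  - $x_{k+1} := x_k + \alpha_k p_k$
  - $r_{k+1} := r_k - \alpha_k A p_k$
  - if $r_{k+1}$ is sufficiently small then exit loop end if
  - $z_{k+1} := M^{-1} r_{k+1}$
  - $\beta_k := \dfrac{r_{k+1}^\mathsf{T} z_{k+1}}{r_k^\mathsf{T} z_k}$
  - $p_{k+1} := z_{k+1} + \beta_k p_k$
  - $k := k + 1$
- end repeat
- The result is $x_{k+1}$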
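A plain-Python sketch of the preconditioned iteration above, written so that the preconditioner is passed as a function returning $M^{-1} r$. The Jacobi (diagonal) preconditioner $M = \operatorname{diag}(A)$ and the badly scaled test matrix are our own illustrative choices, not ones prescribed by the method; helper names are hypothetical.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, v):
    return [dot(row, v) for row in A]


def pcg(A, b, apply_Minv, tol=1e-10, max_iter=1000):
    """Preconditioned CG from x_0 = 0; apply_Minv(r) must return M^{-1} r for a fixed SPD M."""
    x = [0.0] * len(b)
    r = list(b)                                      # r_0 = b - A x_0 with x_0 = 0
    z = apply_Minv(r)                                # z_0 = M^{-1} r_0
    p = z[:]                                         # p_0 = z_0
    rz_old = dot(r, z)
    k = 0
    while dot(r, r) ** 0.5 > tol and k < max_iter:
        Ap = matvec(A, p)
        alpha = rz_old / dot(p, Ap)                  # alpha_k = r_k^T z_k / p_k^T A p_k
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, Ap)]
        k += 1
        if dot(r, r) ** 0.5 <= tol:                  # "if r_{k+1} is sufficiently small then exit loop"
            break
        z = apply_Minv(r)                            # z_{k+1} = M^{-1} r_{k+1}
        rz_new = dot(r, z)
        beta = rz_new / rz_old                       # beta_k = r_{k+1}^T z_{k+1} / r_k^T z_k
        p = [zi + beta * pi for zi, pi in zip(z, p)]
        rz_old = rz_new
    return x, k


def jacobi(A):
    """Illustrative choice: M = diag(A), so applying M^{-1} is a componentwise division."""
    diag = [A[i][i] for i in range(len(A))]
    return lambda r: [ri / di for ri, di in zip(r, diag)]


# A badly scaled SPD matrix (our own example) and a right-hand side.
A = [[400.0, 10.0, 0.0], [10.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]
x, iterations = pcg(A, b, jacobi(A))
```

Passing `apply_Minv` as a function means $M^{-1}$ is never formed as a matrix; any routine that solves $Mz = r$ can be plugged in.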

The above formulation is equivalent to applying the regular conjugate gradient method to the preconditioned system[12]

$$E^{-1} A (E^{-1})^\mathsf{T} \hat{x} = E^{-1} b,$$

where

$$EE^\mathsf{T} = M, \qquad \hat{x} = E^\mathsf{T} x.$$

The Cholesky decomposition of the preconditioner must be used to keep the symmetry (and positive definiteness) of the system. However, this decomposition does not need to be computed, and it is sufficient to know $M^{-1}$. It can be shown that $E^{-1} A (E^{-1})^\mathsf{T}$ has the same spectrum as $M^{-1}A$.

The preconditioner matrix $M$ has to be symmetric positive-definite and fixed, i.e., cannot change from iteration to iteration. If any of these assumptions on the preconditioner is violated, the behavior of the preconditioned conjugate gradient method may become unpredictable.

An example of a commonly used preconditioner is the incomplete Cholesky factorization.[13]

Using the preconditioner in practice

It is important to keep in mind that we don't want to invert the matrix $M$ explicitly in order to get $M^{-1}$ for use in the process, since inverting $M$ would take more time/computational resources than the conjugate gradient algorithm itself. As an example, let's say that we are using a preconditioner coming from incomplete Cholesky factorization. The resulting matrix is the lower triangular matrix $L$, and the preconditioner matrix is:

$$M = LL^\mathsf{T}.$$

Then we have to solve:

$$Mz = r, \qquad z = M^{-1} r.$$

But:

$$M^{-1} = (L^{-1})^\mathsf{T} L^{-1}.$$

Then:

$$z = (L^{-1})^\mathsf{T} L^{-1} r.$$

Let's take an intermediary vector $a$:

$$a = L^{-1} r, \qquad r = La.$$

Since $r$ and $L$ are known, and $L$ is lower triangular, solving for $a$ is easy and computationally cheap by using forward substitution. Then, we substitute $a$ in the original equation:

$$z = (L^{-1})^\mathsf{T} a, \qquad a = L^\mathsf{T} z.$$

Since $a$ and $L^\mathsf{T}$ are known, and $L^\mathsf{T}$ is upper triangular, solving for $z$ is easy and computationally cheap by using backward substitution.

Using this method, there is no need to invert $M$ or $L$ explicitly at all, and we still obtain $z$.
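The two triangular solves can be sketched in plain Python as follows. `L` is assumed to be a lower-triangular factor with non-zero diagonal (for example from an incomplete Cholesky factorization, which is not computed here); the factor in the usage lines is made up for illustration, and the function names are hypothetical.

```python
def forward_substitution(L, r):
    """Solve L a = r for a lower-triangular L (row by row, top to bottom)."""
    n = len(r)
    a = [0.0] * n
    for i in range(n):
        a[i] = (r[i] - sum(L[i][j] * a[j] for j in range(i))) / L[i][i]
    return a


def backward_substitution_transposed(L, a):
    """Solve L^T z = a using L itself; (L^T)[i][j] = L[j][i] is upper triangular."""
    n = len(a)
    z = [0.0] * n
    for i in reversed(range(n)):
        z[i] = (a[i] - sum(L[j][i] * z[j] for j in range(i + 1, n))) / L[i][i]
    return z


def apply_preconditioner(L, r):
    """z = M^{-1} r for M = L L^T, without forming M^{-1} or L^{-1}."""
    a = forward_substitution(L, r)                 # L a = r
    return backward_substitution_transposed(L, a)  # L^T z = a


# Hypothetical lower-triangular factor and residual, for illustration only.
L = [[2.0, 0.0, 0.0], [1.0, 3.0, 0.0], [0.5, -1.0, 1.5]]
r = [1.0, 2.0, 3.0]
z = apply_preconditioner(L, r)
```

Both solves cost about as much as one product with $L$, and a sparse $L$ keeps them cheap, whereas an explicit inverse would generally be dense.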

The flexible preconditioned conjugate gradient method

In numerically challenging applications, sophisticated preconditioners are used, which may lead to variable preconditioning, changing between iterations. Even if the preconditioner is symmetric positive-definite on every iteration, the fact that it may change makes the arguments above invalid, and in practical tests leads to a significant slowdown of the convergence of the algorithm presented above. Using the Polak–Ribière formula

$$\beta_k := \frac{r_{k+1}^\mathsf{T} (z_{k+1} - z_k)}{r_k^\mathsf{T} z_k}$$

instead of the Fletcher–Reeves formula

$$\beta_k := \frac{r_{k+1}^\mathsf{T} z_{k+1}}{r_k^\mathsf{T} z_k}$$

may dramatically improve the convergence in this case.[14] This version of the preconditioned conjugate gradient method can be called[15] flexible, as it allows for variable preconditioning. The flexible version is also shown[16] to be robust even if the preconditioner is not symmetric positive definite (SPD).

The implementation of the flexible version requires storing an extra vector. For a fixed SPD preconditioner, $r_{k+1}^\mathsf{T} z_k = 0$, so both formulas for $\beta_k$ are equivalent in exact arithmetic, i.e., without the round-off error.
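The only changes between the two variants are the formula for $\beta_k$ and the extra stored vector $z_k$. In the plain-Python sketch below (hypothetical helper names; the Jacobi preconditioner and test matrix are chosen only for illustration), `formula="PR"` selects the Polak–Ribière expression and any other value the Fletcher–Reeves one. With the fixed SPD preconditioner used here, both should converge to the same solution.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, v):
    return [dot(row, v) for row in A]


def pcg_beta(A, b, apply_Minv, formula="FR", tol=1e-10, max_iter=1000):
    """Preconditioned CG with a choice of beta: "PR" (Polak-Ribiere) or "FR" (Fletcher-Reeves)."""
    x = [0.0] * len(b)
    r = list(b)
    z = apply_Minv(r)
    p = z[:]
    rz_old = dot(r, z)
    k = 0
    while dot(r, r) ** 0.5 > tol and k < max_iter:
        Ap = matvec(A, p)
        alpha = rz_old / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, Ap)]
        k += 1
        z_new = apply_Minv(r)                        # a flexible method allows this to vary per call
        rz_new = dot(r, z_new)
        if formula == "PR":
            # r_{k+1}^T (z_{k+1} - z_k) / r_k^T z_k: needs the previous z, the extra stored vector
            beta = dot(r, [zn - zo for zn, zo in zip(z_new, z)]) / rz_old
        else:
            # r_{k+1}^T z_{k+1} / r_k^T z_k
            beta = rz_new / rz_old
        p = [zi + beta * pi for zi, pi in zip(z_new, p)]
        z, rz_old = z_new, rz_new
    return x, k


A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]
diag = [A[i][i] for i in range(len(A))]


def jacobi(r):
    """Fixed SPD preconditioner M = diag(A), chosen only for illustration."""
    return [ri / di for ri, di in zip(r, diag)]


x_fr, _ = pcg_beta(A, b, jacobi, formula="FR")
x_pr, _ = pcg_beta(A, b, jacobi, formula="PR")
```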

The mathematical explanation of the better convergence behavior of the method with the Polak–Ribière formula is that the method is locally optimal in this case, in particular, it does not converge slower than the locally optimal steepest descent method.[17]

Vs. the locally optimal steepest descent method

In both the original and the preconditioned conjugate gradient methods one only needs to set $\beta_k := 0$ in order to make them locally optimal, using the line search, steepest descent methods. With this substitution, vectors $p$ are always the same as vectors $z$, so there is no need to store vectors $p$. Thus, every iteration of these steepest descent methods is a bit cheaper compared to that for the conjugate gradient methods. However, the latter converge faster, unless a (highly) variable and/or non-SPD preconditioner is used, see above.
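The difference in iteration counts can be illustrated with the plain-Python sketch below (hypothetical helper names; the diagonal test matrix $\operatorname{diag}(1, 10, 100)$ is our own choice). The steepest descent loop is the unpreconditioned $\beta_k := 0$ special case, so it keeps only $r$ and no separate $p$.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, v):
    return [dot(row, v) for row in A]


def steepest_descent(A, b, tol=1e-10, max_iter=100000):
    """beta_k = 0 for all k: the search direction is r_k itself, so no p is stored."""
    x = [0.0] * len(b)
    r = list(b)
    k = 0
    while dot(r, r) ** 0.5 > tol and k < max_iter:
        Ar = matvec(A, r)
        alpha = dot(r, r) / dot(r, Ar)               # exact line search along r_k
        x = [xi + alpha * ri for xi, ri in zip(x, r)]
        r = [ri - alpha * qi for ri, qi in zip(r, Ar)]
        k += 1
    return x, k


def conjugate_gradient(A, b, tol=1e-10, max_iter=100000):
    """Plain CG from x_0 = 0, returning the solution and the number of iterations."""
    x = [0.0] * len(b)
    r = list(b)
    p = r[:]
    rs_old = dot(r, r)
    k = 0
    while rs_old ** 0.5 > tol and k < max_iter:
        Ap = matvec(A, p)
        alpha = rs_old / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, Ap)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
        k += 1
    return x, k


A = [[1.0, 0.0, 0.0], [0.0, 10.0, 0.0], [0.0, 0.0, 100.0]]   # kappa(A) = 100
b = [1.0, 1.0, 1.0]
x_sd, k_sd = steepest_descent(A, b)
x_cg, k_cg = conjugate_gradient(A, b)
```

On this matrix the conjugate gradient loop stops after a handful of iterations, whereas steepest descent needs many more, even though each of its iterations is slightly cheaper.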

Conjugate gradient method as optimal feedback controller for double integrator

The conjugate gradient method can also be derived using optimal control theory.[18] In this approach, the conjugate gradient method falls out as an optimal feedback controller,

$$u = k(x, v) := -\gamma_a \nabla f(x) - \gamma_b v,$$

for the double integrator system,

$$\dot{x} = v, \qquad \dot{v} = u.$$

The quantities $\gamma_a$ and $\gamma_b$ are variable feedback gains.[18]

Conjugate gradient on the normal equations

The conjugate gradient method can be applied to an arbitrary n-by-m matrix A by applying it to the normal equations with matrix $A^\mathsf{T}A$ and right-hand side vector $A^\mathsf{T}b$, since $A^\mathsf{T}A$ is a symmetric positive-semidefinite matrix for any A. The result is conjugate gradient on the normal equations (CGN or CGNR).

$$A^\mathsf{T}Ax = A^\mathsf{T}b$$

As an iterative method, it is not necessary to form $A^\mathsf{T}A$ explicitly in memory but only to perform the matrix–vector and transpose matrix–vector multiplications. Therefore, CGNR is particularly useful when A is a sparse matrix since these operations are usually extremely efficient. However, the downside of forming the normal equations is that the condition number $\kappa(A^\mathsf{T}A)$ is equal to $\kappa^2(A)$ and so the rate of convergence of CGNR may be slow and the quality of the approximate solution may be sensitive to roundoff errors. Finding a good preconditioner is often an important part of using the CGNR method.
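A sketch of CGNR in plain Python, using only products with $A$ and $A^\mathsf{T}$ so that $A^\mathsf{T}A$ is never formed; this arrangement of the recurrences is the one usually called CGLS. The helper names and the small $3 \times 2$ least-squares example are assumptions of ours.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, v):
    """A v for an n-by-m matrix A stored as n rows."""
    return [dot(row, v) for row in A]


def matvec_transpose(A, v):
    """A^T v computed from the rows of A, without building A^T."""
    m = len(A[0])
    return [sum(row[j] * vi for row, vi in zip(A, v)) for j in range(m)]


def cgnr(A, b, tol=1e-12, max_iter=1000):
    """CG on A^T A x = A^T b using only products with A and A^T."""
    x = [0.0] * len(A[0])
    r = list(b)                       # residual of the original system, b - A x
    s = matvec_transpose(A, r)        # residual of the normal equations, A^T (b - A x)
    p = s[:]
    ss_old = dot(s, s)
    k = 0
    while ss_old ** 0.5 > tol and k < max_iter:
        q = matvec(A, p)
        alpha = ss_old / dot(q, q)    # p^T (A^T A) p = ||A p||^2
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, q)]
        s = matvec_transpose(A, r)
        ss_new = dot(s, s)
        p = [si + (ss_new / ss_old) * pi for si, pi in zip(s, p)]
        ss_old = ss_new
        k += 1
    return x, k


# Overdetermined 3-by-2 least-squares example (our own choice).
A = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]
b = [1.0, 2.0, 2.0]
x, iterations = cgnr(A, b)
```

For this example the normal equations are $\begin{bmatrix}3&3\\3&5\end{bmatrix}x = \begin{bmatrix}5\\6\end{bmatrix}$, whose solution is $x = [7/6, 1/2]^\mathsf{T}$.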

Several algorithms have been proposed (e.g., CGLS, LSQR). The LSQR algorithm purportedly has the best numerical stability when A is ill-conditioned, i.e., A has a large condition number.

Conjugate gradient method for complex Hermitian matrices

The conjugate gradient method with a trivial modification is extendable to solving, given complex-valued matrix A and vector b, the system of linear equations $Ax = b$ for the complex-valued vector x, where A is a Hermitian (i.e., A' = A) and positive-definite matrix, and the symbol ' denotes the conjugate transpose. The trivial modification is simply substituting the conjugate transpose for the real transpose everywhere.
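A plain-Python sketch of the complex variant, in which every transpose is replaced by the conjugate transpose. The $2 \times 2$ Hermitian positive-definite example (eigenvalues 1 and 3) and the helper names are our own.

```python
def cdot(u, v):
    """u^H v: the first argument is conjugated (conjugate transpose in place of transpose)."""
    return sum(ui.conjugate() * vi for ui, vi in zip(u, v))


def cmatvec(A, v):
    return [sum(aij * vj for aij, vj in zip(row, v)) for row in A]


def cg_hermitian(A, b, tol=1e-12, max_iter=100):
    """CG for a Hermitian positive-definite A; identical to the real case with ^T -> ^H."""
    x = [0j] * len(b)
    r = [complex(bi) for bi in b]
    p = r[:]
    rs_old = cdot(r, r).real                  # r^H r is real and non-negative
    k = 0
    while rs_old ** 0.5 > tol and k < max_iter:
        Ap = cmatvec(A, p)
        alpha = rs_old / cdot(p, Ap).real     # p^H A p is real and positive for HPD A
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, Ap)]
        rs_new = cdot(r, r).real
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
        k += 1
    return x


A = [[2.0, 1j], [-1j, 2.0]]       # Hermitian, eigenvalues 1 and 3
b = [1.0, 1j]
x = cg_hermitian(A, b)
```

For this example the solution is $x = [1, i]^\mathsf{T}$.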

Advantages and disadvantages

The advantages and disadvantages of the conjugate gradient methods are summarized in the lecture notes by Nemirovsky and BenTal.[19]: Sec.7.3

A pathological example

This example is from [20]. Let , and define

Since is invertible, there exists a unique solution to . Solving it by conjugate gradient descent gives us rather bad convergence:

In words, during the CG process, the error grows exponentially, until it suddenly becomes zero as the unique solution is found.

See also

References

Further reading

External links
