Magnitude (mathematics)

Property determining comparison and ordering

From Wikipedia, the free encyclopedia

In mathematics, the magnitude or size of a mathematical object is a property which determines whether the object is larger or smaller than other objects of the same kind. More formally, an object's magnitude is the displayed result of an ordering (or ranking) of the class of objects to which it belongs. Magnitude as a concept dates to Ancient Greece and has been applied as a measure of distance from one object to another. For numbers, the absolute value of a number is commonly applied as the measure of units between a number and zero.

In vector spaces, the Euclidean norm is a measure of magnitude used to define a distance between two points in space. In physics, magnitude can be defined as quantity or distance. An order of magnitude is typically defined as a factor of 10 between two numbers, that is, a difference of one place on the decimal scale.

History

Ancient Greeks distinguished between several types of magnitude,[1] including:

- Positive fractions

- Line segments (ordered by length)

- Plane figures (ordered by area)

- Solids (ordered by volume)

- Angles (ordered by angular magnitude)

They proved that the first two could not be the same, or even isomorphic systems of magnitude.[2] They did not consider negative magnitudes to be meaningful, and magnitude is still primarily used in contexts in which zero is either the smallest size or less than all possible sizes.

Numbers

The magnitude of any number $x$ is usually called its absolute value or modulus, denoted by $|x|$.[3]

Real numbers

The absolute value of a real number r is defined by:[4]

$$|r| = \begin{cases} r, & \text{if } r \geq 0 \\ -r, & \text{if } r < 0 \end{cases}$$

Absolute value may also be thought of as the number's distance from zero on the real number line. For example, the absolute value of both 70 and −70 is 70.
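The piecewise definition can be sketched in a few lines of Python. The function name `absolute_value` is illustrative only; Python's built-in `abs` computes the same quantity.

```python
def absolute_value(r: float) -> float:
    """Return |r|: r itself when r >= 0, otherwise -r."""
    return r if r >= 0 else -r


# Both 70 and -70 lie 70 units from zero on the real number line.
print(absolute_value(70), absolute_value(-70))
```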

Complex numbers

A complex number z may be viewed as the position of a point P in a 2-dimensional space, called the complex plane. The absolute value (or modulus) of z may be thought of as the distance of P from the origin of that space. The formula for the absolute value of z = a + bi is similar to that for the Euclidean norm of a vector in a 2-dimensional Euclidean space:[5]

$$|z| = \sqrt{a^2 + b^2}$$

where the real numbers a and b are the real part and the imaginary part of z, respectively. For instance, the modulus of −3 + 4i is $\sqrt{(-3)^2 + 4^2} = 5$. Alternatively, the magnitude of a complex number z may be defined as the square root of the product of itself and its complex conjugate, $\bar{z}$, where for any complex number $z = a + bi$, its complex conjugate is $\bar{z} = a - bi$:

$$|z| = \sqrt{z\bar{z}} = \sqrt{(a + bi)(a - bi)} = \sqrt{a^2 - abi + abi - b^2 i^2} = \sqrt{a^2 + b^2}$$

(where $i^2 = -1$).
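As a small sketch, the two equivalent ways of computing the modulus (the square root of a² + b², and the square root of z times its conjugate) can be compared in Python. The function names here are hypothetical; the built-in `abs` already handles complex numbers.

```python
import math


def modulus(z: complex) -> float:
    """Modulus from the real and imaginary parts: sqrt(a^2 + b^2)."""
    return math.sqrt(z.real ** 2 + z.imag ** 2)


def modulus_via_conjugate(z: complex) -> float:
    """Modulus as the square root of z times its complex conjugate."""
    product = z * z.conjugate()  # imaginary part cancels, leaving a^2 + b^2
    return math.sqrt(product.real)


z = complex(-3, 4)  # the example -3 + 4i from the text
print(modulus(z), modulus_via_conjugate(z), abs(z))
```

All three values agree for −3 + 4i, matching the modulus of 5 worked out above.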

Vector spaces

Euclidean vector space

A Euclidean vector represents the position of a point P in a Euclidean space. Geometrically, it can be described as an arrow from the origin of the space (vector tail) to that point (vector tip). Mathematically, a vector x in an n-dimensional Euclidean space can be defined as an ordered list of n real numbers (the Cartesian coordinates of P): $\mathbf{x} = [x_1, x_2, \ldots, x_n]$. Its magnitude or length, denoted by $\|\mathbf{x}\|$,[6] is most commonly defined as its Euclidean norm (or Euclidean length):[7]

$$\|\mathbf{x}\| = \sqrt{x_1^2 + x_2^2 + \cdots + x_n^2}$$

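For instance, in a 3-dimensional space, the magnitude of [3, 4, 12] is 13 because $\sqrt{3^2 + 4^2 + 12^2} = \sqrt{169} = 13$. This is equivalent to the square root of the dot product of the vector with itself:

$$\|\mathbf{x}\| = \sqrt{\mathbf{x} \cdot \mathbf{x}}$$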

The Euclidean norm of a vector is just a special case of Euclidean distance: the distance between its tail and its tip. Two similar notations are used for the Euclidean norm of a vector x: $\|\mathbf{x}\|$ and $|\mathbf{x}|$.

A disadvantage of the second notation is that it can also be used to denote the absolute value of scalars and the determinants of matrices, which introduces an element of ambiguity.
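A minimal Python sketch of the Euclidean norm follows, computed both from the sum of squared coordinates and from the dot product of the vector with itself. The helper names `dot` and `euclidean_norm` are illustrative assumptions, not part of any particular library.

```python
import math
from typing import Sequence


def dot(x: Sequence[float], y: Sequence[float]) -> float:
    """Dot product of two vectors of equal length."""
    return sum(a * b for a, b in zip(x, y))


def euclidean_norm(x: Sequence[float]) -> float:
    """Euclidean norm: square root of the dot product of x with itself."""
    return math.sqrt(dot(x, x))


v = [3, 4, 12]  # the 3-dimensional example from the text
print(euclidean_norm(v))

# The norm is the Euclidean distance from the origin (tail) to the tip.
print(math.dist([0, 0, 0], v))
```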

Normed vector spaces

By definition, all Euclidean vectors have a magnitude (see above). However, a vector in an abstract vector space does not possess a magnitude.

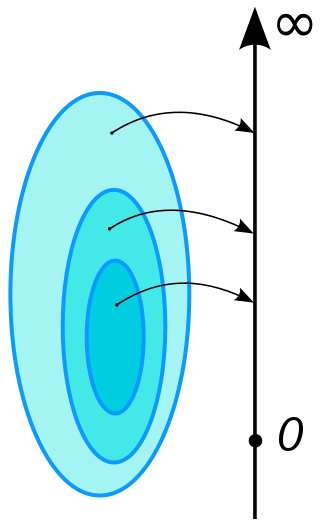

A vector space endowed with a norm, such as the Euclidean space, is called a normed vector space.[8] The norm of a vector v in a normed vector space can be considered to be the magnitude of v.

Pseudo-Euclidean space

In a pseudo-Euclidean space, the magnitude of a vector is the value of the quadratic form for that vector.
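To illustrate, the sketch below assumes the standard diagonal quadratic form in which the first k squared coordinates are added and the remaining ones are subtracted. The function name and the parameter `k` are hypothetical and chosen only for this example.

```python
from typing import Sequence


def quadratic_form(x: Sequence[float], k: int) -> float:
    """q(x) = x_1^2 + ... + x_k^2 - (x_{k+1}^2 + ... + x_n^2).

    Assumes a diagonal form with k positive terms followed by negative ones.
    """
    positive = sum(c * c for c in x[:k])
    negative = sum(c * c for c in x[k:])
    return positive - negative


# With two positive terms and one negative term:
print(quadratic_form([3, 4, 5], k=2))  # 9 + 16 - 25
print(quadratic_form([1, 0, 2], k=2))  # 1 + 0 - 4
```

Unlike a Euclidean norm, this value can be zero for a nonzero vector, or negative, as the two calls above show.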

Logarithmic magnitudes

When comparing magnitudes, a logarithmic scale is often used. Examples include the loudness of a sound (measured in decibels), the brightness of a star, and the Richter scale of earthquake intensity. Logarithmic magnitudes can be negative. In the natural sciences, a logarithmic magnitude is typically referred to as a level.
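As a hedged illustration of a logarithmic magnitude, the sketch below uses the usual decibel convention for power ratios, 10·log10(P/P0). That formula is not given in the text above and is assumed here; the function name `level_db` is illustrative.

```python
import math


def level_db(power: float, reference: float) -> float:
    """Level in decibels of a power relative to a reference power.

    Assumes the common power-ratio convention: 10 * log10(P / P0).
    """
    if power <= 0 or reference <= 0:
        raise ValueError("power and reference must be positive")
    return 10 * math.log10(power / reference)


print(level_db(100.0, 1.0))  # positive: power is above the reference
print(level_db(0.1, 1.0))    # negative: power is below the reference
```

The second call shows how a logarithmic magnitude becomes negative whenever the quantity is smaller than the chosen reference.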

Order of magnitude

Orders of magnitude denote differences in numeric quantities, usually measurements, by a factor of 10, that is, a difference of one digit in the location of the decimal point.
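One common way to make this concrete, assumed here rather than stated in the text, is to take the floor of the base-10 logarithm of a number's absolute value. The function name `order_of_magnitude` is hypothetical.

```python
import math


def order_of_magnitude(x: float) -> int:
    """Floor of log10(|x|): the power of 10 in scientific notation.

    This floor-based convention is an assumption; other conventions exist.
    """
    if x == 0:
        raise ValueError("zero has no order of magnitude")
    return math.floor(math.log10(abs(x)))


# 1500 = 1.5 * 10^3 and 15 = 1.5 * 10^1 differ by two orders of magnitude.
print(order_of_magnitude(1500) - order_of_magnitude(15))
```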

Other mathematical measures

In mathematics, the concept of a measure is a generalization and formalization of geometrical measures (length, area, volume) and other common notions, such as magnitude, mass, and probability of events. These seemingly distinct concepts have many similarities and can often be treated together in a single mathematical context. Measures are foundational in probability theory and integration theory, and can be generalized to assume negative values, as with electrical charge. Far-reaching generalizations of measure (such as spectral measures and projection-valued measures) are widely used in quantum physics and physics in general.

The intuition behind this concept dates back to Ancient Greece, when Archimedes tried to calculate the area of a circle.[9][10] But it was not until the late 19th and early 20th centuries that measure theory became a branch of mathematics. The foundations of modern measure theory were laid in the works of Émile Borel, Henri Lebesgue, Nikolai Luzin, Johann Radon, Constantin Carathéodory, and Maurice Fréchet, among others. According to Thomas W. Hawkins Jr., "It was primarily through the theory of multiple integrals and, in particular the work of Camille Jordan that the importance of the notion of measurability was first recognized."[11]
