Minifloat

Floating-point values coded as few bits

From Wikipedia, the free encyclopedia

In computing, minifloats are floating-point values represented with very few bits. This reduced precision makes them ill-suited for general-purpose numerical calculations, but they are useful for special purposes such as:

- Computer graphics, where human perception of color and light levels has low precision.[1] The 16-bit half-precision format is very popular.

- Machine learning, which can be relatively insensitive to numeric precision. 16-bit, 8-bit, and even 4-bit floats are increasingly being used.[2]

Additionally, they are frequently encountered as a pedagogical tool in computer-science courses to demonstrate the properties and structures of floating-point arithmetic and IEEE 754 numbers.

Depending on context, minifloat may mean any size less than 32 bits, any size less than or equal to 16 bits, or any size less than 16 bits. The term microfloat may mean any size less than or equal to 8 bits.[3]

Notation

This page uses the notation (S.E.M) to describe a minifloat:

- S is the length of the sign field (0 or 1).

- E is the length of the exponent field.

- M is the length of the mantissa (significand) field.

Minifloats can be designed following the principles of the IEEE 754 standard. Almost all use the smallest exponent for both subnormal and normal numbers. Many reserve the largest exponent for infinity and NaN, indicated by (special exponent) SE = 1. Some minifloats use this exponent value for ordinary numbers, in which case SE = 0.

The exponent bias is $B = 2^{E-1} - SE$. This value ensures that all representable numbers have a representable reciprocal.

The notation can be converted to a (B,P,L,U) format as $(2,\ M + 1,\ SE - 2^{E-1} + 1,\ 2^{E-1} - 1)$.

A common notation used in the field of machine learning is FPn EeMm, where the lowercase letters are replaced by numbers. For example, FP8 E4M3 is the same as (1.4.3).
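The bias and (B,P,L,U) formulas above can be checked with a few lines of Python. This is a minimal sketch: the function name `minifloat_params` and its return layout are illustrative choices, not part of any standard.

```python
def minifloat_params(S, E, M, SE=1):
    """Return (bias, (B, P, L, U)) for an (S.E.M) minifloat.

    SE is 1 if the largest exponent is reserved for infinity/NaN, else 0.
    S (the sign-field length) does not affect these parameters.
    """
    bias = 2 ** (E - 1) - SE
    radix = 2
    precision = M + 1                # stored bits plus the implicit leading bit
    e_min = SE - 2 ** (E - 1) + 1    # L: smallest exponent
    e_max = 2 ** (E - 1) - 1         # U: largest exponent of a finite value
    return bias, (radix, precision, e_min, e_max)


print(minifloat_params(1, 4, 3))     # (7, (2, 4, -6, 7))
print(minifloat_params(1, 5, 10))    # (15, (2, 11, -14, 15)), half precision
```

For (1.5.10) the result matches the familiar half-precision parameters: bias 15 and exponent range −14 to 15.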

Usage

Many situations that call for floating-point numbers do not actually require much precision. This is typical for high dynamic range graphics and image processing. It is also typical for larger neural networks, a property that has been exploited since the 2020s to allow increasingly large language models to be trained and deployed. The most general-purpose example is fp16 (1.5.10), defined in IEEE 754-2008 and called "half-precision" (as opposed to 32-bit single and 64-bit double precision).

The bfloat16 (1.8.7) format is the first 16 bits of a standard single-precision number and was often used in image processing and machine learning before hardware support was added for other formats.
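Because bfloat16 is the upper half of a single-precision value, a conversion can be sketched with plain bit shifts. The helper names below are illustrative, and the sketch truncates (rounds toward zero); practical converters often round to nearest instead.

```python
import struct


def float32_bits(x):
    """Return the 32-bit IEEE 754 single-precision pattern of x."""
    return struct.unpack(">I", struct.pack(">f", x))[0]


def to_bfloat16_bits(x):
    """Keep the upper 16 bits: sign, 8 exponent bits, 7 mantissa bits."""
    return float32_bits(x) >> 16


def from_bfloat16_bits(b):
    """Widen a bfloat16 pattern back to a Python float by zero-filling."""
    return struct.unpack(">f", struct.pack(">I", (b & 0xFFFF) << 16))[0]


b = to_bfloat16_bits(3.14159)
print(hex(b), from_bfloat16_bits(b))   # 0x4049 3.140625
```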

Graphics

The Radeon R300 and R420 GPUs used an "fp24" floating-point format (1.7.16).[4] "Full Precision" in Direct3D 9.0 is a proprietary 24-bit floating-point format. Microsoft's D3D9 (Shader Model 2.0) graphics API initially supported both FP24 (as in ATI's R300 chip) and FP32 (as in Nvidia's NV30 chip) as "Full Precision", as well as FP16 as "Partial Precision" for vertex and pixel shader calculations performed by the graphics hardware.

In 2016 Khronos defined 10-bit (0.5.5) and 11-bit (0.5.6) unsigned formats for use with Vulkan.[5][6] These can be converted from positive half-precision by truncating the sign and trailing digits.
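The truncation described above can be sketched as follows, using the half-precision `"e"` format of Python's `struct` module. The function name `half_to_unsigned` is hypothetical, and rounding is ignored.

```python
import struct


def half_bits(x):
    """Return the 16-bit half-precision pattern of x."""
    return struct.unpack(">H", struct.pack(">e", x))[0]


def half_to_unsigned(x, mantissa_bits):
    """Drop the sign bit and the trailing mantissa bits of a half.

    mantissa_bits=6 gives the 11-bit (0.5.6) format,
    mantissa_bits=5 gives the 10-bit (0.5.5) format.
    """
    if x < 0:
        raise ValueError("unsigned formats cannot hold negative values")
    return (half_bits(x) & 0x7FFF) >> (10 - mantissa_bits)


print(hex(half_to_unsigned(1.0, 6)))   # 0x3c0 (11-bit pattern)
print(hex(half_to_unsigned(1.0, 5)))   # 0x1e0 (10-bit pattern)
```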

Microcontroller

Minifloats are also commonly used in embedded devices such as microcontrollers, where floating-point arithmetic must be emulated in software. To speed up the computation, the mantissa typically occupies exactly half of the bits, so the register boundary automatically separates the parts without shifting (e.g., (1.3.4) on 4-bit devices).[citation needed]

Machine learning

In 2022, Nvidia and others announced support for the "fp8" format (1.5.2, E5M2). Values can be converted from half precision by truncating the trailing digits. This format supports special values such as NaN and infinity. They also announced FP8 E4M3 (1.4.3), a format without infinity and with only two (positive and negative) representations for NaN, on the reasoning that special values are unnecessary in the inference (forward-running, as opposed to training via backpropagation) of neural networks.[2] The formats have been made into an industry standard called OCP-FP8.[7] Further compression, such as FP4 E2M1 (1.2.1), has also proven fruitful.[8]
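Since E5M2 shares its sign and exponent layout with half precision, the truncating conversion amounts to keeping the top byte. The sketch below assumes truncation only (no rounding), and the function names are illustrative. One caveat of plain truncation is that a half-precision NaN whose payload sits entirely in the discarded low bits would turn into infinity.

```python
import struct


def to_e5m2_bits(x):
    """Truncate a half-precision value to its top 8 bits (1.5.2)."""
    h = struct.unpack(">H", struct.pack(">e", x))[0]
    return h >> 8


def from_e5m2_bits(b):
    """Widen an E5M2 byte back to half precision by zero-filling."""
    return struct.unpack(">e", struct.pack(">H", (b & 0xFF) << 8))[0]


for x in (1.0, 1.3, -2.0, float("inf")):
    b = to_e5m2_bits(x)
    print(x, f"{b:08b}", from_e5m2_bits(b))
```

With only two mantissa bits, 1.3 comes back as 1.25.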

Since 2023, IEEE SA Working Group P3109 has been working on a standard for minifloats optimized for machine learning by systematizing current practice. Interim Report version 3.0 (August 2025) defines a family of formats under the systematic name "binaryKpP[s/u][e/f]", where K is the total bit length, P is the precision (the number of significand bits, counting the implicit leading bit), s/u (signed/unsigned) indicates whether a sign bit is present, and e/f (extended/finite) indicates whether infinity is included. By convention, s and e may be omitted. To save space for more numbers, there is no negative zero and only one representation for NaN; in signed formats, NaN can therefore use the bit pattern that would otherwise have been negative zero. For example, the FP4-E2M1 format can be approximated as the following in P3109:[9]

(For binary4p2se, ±6 are replaced by ±Infinity.)

A downside of very small minifloats is that they have very little representable dynamic range. To fix this problem, the machine learning industry has invented "microscaling formats" (MX), a kind of block floating-point. In an MX format, a group of 32 minifloats shares an additional scaling factor represented by an "E8M0" minifloat (which can represent powers of 2 between $2^{-127}$ and $2^{127}$). MX has been defined for FP8-E5M2, FP8-E4M3, FP6-E3M2 (1.3.2), FP6-E2M3 (1.2.3), and FP4-E2M1.[10]
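The idea of a shared power-of-two scale can be illustrated with a small sketch. It assumes FP4-E2M1 elements (magnitudes 0, 0.5, 1, 1.5, 2, 3, 4, 6) and picks the smallest power of two that brings the largest input within ±6. That scale-selection rule and the nearest-value rounding are simplifying assumptions for illustration, not the procedure laid out in the MX specification, and the function names are hypothetical.

```python
import math

# Magnitudes representable by FP4-E2M1.
FP4_E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def quantize_block(values):
    """Return (k, elements): a shared scale 2**k and one FP4 value per input."""
    amax = max(abs(v) for v in values)
    if amax == 0.0:
        k = 0
    else:
        # Smallest k with amax / 2**k <= 6, clamped to the E8M0 range.
        k = max(-127, min(127, math.ceil(math.log2(amax / 6.0))))
    scale = 2.0 ** k
    elements = []
    for v in values:
        target = abs(v) / scale
        nearest = min(FP4_E2M1_MAGNITUDES, key=lambda m: abs(m - target))
        elements.append(math.copysign(nearest, v))
    return k, elements


def dequantize_block(k, elements):
    """Multiply every element by the shared scale."""
    return [e * 2.0 ** k for e in elements]


block = [0.0, 4.0, -12.0, 24.0] + [0.0] * 28   # 32 values, as in MX
k, elements = quantize_block(block)
print(k, elements[:4], dequantize_block(k, elements)[:4])
```

In this block the value 24 is far outside the range of a bare FP4-E2M1 element, but with the shared scale of 2² it maps to 6 and survives the round trip.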

Examples

8-bit (1.4.3)

A minifloat in 1 byte (8 bits) with 1 sign bit, 4 exponent bits and 3 significand bits (1.4.3) is demonstrated here. The exponent bias is defined as 7 to center the values around 1 to match other IEEE 754 floats,[11][12] so (for most values) the actual multiplier for exponent x is $2^{x-7}$. All IEEE 754 principles should be valid.[13] This form is quite common for instruction.[citation needed]

Zero is represented by a zero exponent with a zero mantissa. The zero exponent means zero is a subnormal number with a leading "0." prefix, and with the zero mantissa all bits after the binary point are zero, meaning this value is interpreted as $0.000_2 \times 2^{-6} = 0$. Floating-point numbers use a signed zero, so −0 is also available and is equal to positive 0.

- 0 0000 000 = 0
- 1 0000 000 = −0

For the lowest exponent the significand is extended with "0." and the exponent value is treated as 1 higher like the least normalized number:

- 0 0000 001 = $0.001_2 \times 2^{1-7} = 0.125 \times 2^{-6} = 0.001953125$ (least subnormal number)
- ...
- 0 0000 111 = $0.111_2 \times 2^{1-7} = 0.875 \times 2^{-6} = 0.013671875$ (greatest subnormal number)

For all other exponents, the significand is extended with "1.":

- 0 0001 000 = $1.000_2 \times 2^{1-7} = 1 \times 2^{-6} = 0.015625$ (least normalized number)
- 0 0001 001 = $1.001_2 \times 2^{1-7} = 1.125 \times 2^{-6} = 0.017578125$
- ...
- 0 0111 000 = $1.000_2 \times 2^{7-7} = 1 \times 2^{0} = 1$
- 0 0111 001 = $1.001_2 \times 2^{7-7} = 1.125 \times 2^{0} = 1.125$ (least value above 1)
- ...
- 0 1110 000 = $1.000_2 \times 2^{14-7} = 1.000 \times 2^{7} = 128$
- 0 1110 001 = $1.001_2 \times 2^{14-7} = 1.125 \times 2^{7} = 144$
- ...
- 0 1110 110 = $1.110_2 \times 2^{14-7} = 1.750 \times 2^{7} = 224$
- 0 1110 111 = $1.111_2 \times 2^{14-7} = 1.875 \times 2^{7} = 240$ (greatest normalized number)

Infinity values have the highest exponent, with the mantissa set to zero. The sign bit can be either positive or negative.

- 0 1111 000 = +infinity
- 1 1111 000 = −infinity

NaN values have the highest exponent, with the mantissa non-zero.

s 1111 mmm = NaN (if mmm ≠ 000)

This is a chart of all possible values for this example 8-bit float:

There are only 242 different non-NaN values (if +0 and −0 are regarded as different), because 14 of the bit patterns represent NaNs.
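The decoding rules for this (1.4.3) example fit in a short function. The following is a sketch of those rules only; the name `decode_143` is illustrative and not taken from any library.

```python
import math


def decode_143(byte, bias=7):
    """Decode one 8-bit (1.4.3) pattern into a Python float."""
    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 3) & 0x0F
    mantissa = byte & 0x07
    if exponent == 0x0F:                       # highest exponent: Inf or NaN
        return sign * math.inf if mantissa == 0 else math.nan
    if exponent == 0:                          # subnormal: leading "0."
        return sign * (mantissa / 8) * 2.0 ** (1 - bias)
    return sign * (1 + mantissa / 8) * 2.0 ** (exponent - bias)


values = [decode_143(b) for b in range(256)]
print(sum(not math.isnan(v) for v in values))   # 242
print(decode_143(0b0_0000_001))                 # 0.001953125
print(decode_143(0b0_1110_111))                 # 240.0
```

Enumerating all 256 patterns reproduces the count of 242 non-NaN values stated above.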

Converting to and from 8-bit floats in a programming language usually requires a library or a custom function, since this format is not standardized. One example is a public-domain implementation in C++.

8-bit (1.4.3) with B = −2

At these small sizes other bias values may be interesting, for instance a bias of −2 will make the numbers 0–16 have the same bit representation as the integers 0–16, with the loss that no non-integer values can be represented.

- 0 0000 000 = $0.000_2 \times 2^{1-(-2)} = 0.0 \times 2^{3} = 0$ (subnormal number)
- 0 0000 001 = $0.001_2 \times 2^{1-(-2)} = 0.125 \times 2^{3} = 1$ (subnormal number)
- 0 0000 111 = $0.111_2 \times 2^{1-(-2)} = 0.875 \times 2^{3} = 7$ (subnormal number)
- 0 0001 000 = $1.000_2 \times 2^{1-(-2)} = 1.000 \times 2^{3} = 8$ (normalized number)
- 0 0001 111 = $1.111_2 \times 2^{1-(-2)} = 1.875 \times 2^{3} = 15$ (normalized number)
- 0 0010 000 = $1.000_2 \times 2^{2-(-2)} = 1.000 \times 2^{4} = 16$ (normalized number)
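The integer-matching property can be verified directly. This sketch decodes only finite patterns and, as a simplification, treats every exponent value as an ordinary one; `decode_143_biased` is a hypothetical helper name.

```python
def decode_143_biased(byte, bias):
    """Decode a (1.4.3) pattern with a chosen bias (no Inf/NaN handling)."""
    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 3) & 0x0F
    mantissa = byte & 0x07
    if exponent == 0:                          # subnormal: leading "0."
        return sign * (mantissa / 8) * 2.0 ** (1 - bias)
    return sign * (1 + mantissa / 8) * 2.0 ** (exponent - bias)


# Bit patterns 0..16 decode to the integers 0..16.
print(all(decode_143_biased(n, bias=-2) == n for n in range(17)))   # True
# The pattern for 17 decodes to 18: the spacing has become 2.
print(decode_143_biased(17, bias=-2))                               # 18.0
```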

8-bit (1.3.4)

Any bit allocation is possible. A format can give more bits to the exponent if more dynamic range is needed at the cost of precision, or more bits to the significand if more precision is needed at the cost of dynamic range. At the extreme, it is possible to allocate all bits to the exponent (1.7.0), or all but one of the bits to the significand (1.1.6), leaving the exponent with only one bit. The exponent must be given at least one bit; otherwise the format no longer makes sense as a float and simply becomes a signed number.

Here is a chart of all possible values for (1.3.4). $M \ge 2^{E-1}$ ensures that the precision remains at least 0.5 throughout the entire range.[14]

Tables like the above can be generated for any combination of SEMB (sign, exponent, mantissa/significand, and bias) values using a script in Python or in GDScript.
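A minimal sketch of such a table generator is shown below. It is not the script referred to above; the function names and the row-per-exponent layout are illustrative assumptions.

```python
import math


def decode(bits, E, M, bias, SE=1):
    """Decode a (1.E.M) pattern; SE=1 reserves the top exponent for Inf/NaN."""
    sign = -1.0 if (bits >> (E + M)) & 1 else 1.0
    exponent = (bits >> M) & ((1 << E) - 1)
    mantissa = bits & ((1 << M) - 1)
    frac = mantissa / (1 << M)
    if SE and exponent == (1 << E) - 1:
        return sign * math.inf if mantissa == 0 else math.nan
    if exponent == 0:                          # subnormal: leading "0."
        return sign * frac * 2.0 ** (1 - bias)
    return sign * (1 + frac) * 2.0 ** (exponent - bias)


def table(E, M, bias, SE=1):
    """One row per exponent value, one column per mantissa value (sign = 0)."""
    return [[decode((e << M) | m, E, M, bias, SE) for m in range(1 << M)]
            for e in range(1 << E)]


rows = table(E=3, M=4, bias=3)                 # (1.3.4) with B = 2**(3-1) - 1
for row in rows:
    print(row)

finite = sorted(v for row in rows for v in row if math.isfinite(v))
print(max(b - a for a, b in zip(finite, finite[1:])))   # 0.5
```

For (1.3.4) the largest gap between neighbouring finite values is 0.5, consistent with the precision claim for this format.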

6-bit (1.3.2)

With only 64 values, it is possible to plot all the values in a diagram, which can be instructive.

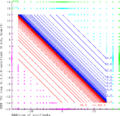

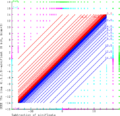

These graphics demonstrate arithmetic on two 6-bit (1.3.2) minifloats, following the rules of IEEE 754 exactly. Green X's are NaN results, cyan X's are +Infinity results, and magenta X's are −Infinity results. The range of the finite results is filled with curves joining equal values, blue for positive and red for negative.

- Addition

- Subtraction

- Multiplication

- Division

4-bit (1.2.1)

The smallest possible float size that follows all IEEE principles, including normalized numbers, subnormal numbers, signed zero, signed infinity, and multiple NaN values, is a 4-bit float with 1-bit sign, 2-bit exponent, and 1-bit mantissa.[15]

This example 4-bit float is very similar to the FP4-E2M1 format used by Nvidia, and the IEEE P3109 binary4p2s* formats, as described above, except for a different allocation of the special Infinity and NaN values. This example uses a traditional allocation following the same principles as other float sizes. Floats intended for application-specific use cases instead of general-purpose interoperability may prefer to use those bit patterns for finite numbers, depending on the needs of the application.
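The traditional allocation for this 4-bit example can be enumerated in a few lines. The sketch assumes the IEEE-style bias $B = 2^{2-1} - 1 = 1$; the name `decode_121` is illustrative.

```python
import math


def decode_121(nibble):
    """Decode a 4-bit (1.2.1) pattern with IEEE-style Inf/NaN allocation."""
    sign = -1.0 if nibble & 0b1000 else 1.0
    exponent = (nibble >> 1) & 0b11
    mantissa = nibble & 0b1
    if exponent == 0b11:                       # highest exponent: Inf or NaN
        return sign * math.inf if mantissa == 0 else math.nan
    if exponent == 0:                          # subnormal, scaled by 2**(1 - bias)
        return sign * (mantissa / 2) * 2.0 ** (1 - 1)
    return sign * (1 + mantissa / 2) * 2.0 ** (exponent - 1)


print([decode_121(n) for n in range(8)])
# [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, inf, nan]
```

FP4-E2M1 instead spends the last two positive patterns on the finite values 4 and 6, which is the difference in allocation mentioned above.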

3-bit (1.1.1)

If normalized numbers are not required, the size can be reduced to 3 bits by reducing the exponent to 1 bit.

2-bit (0.2.1) and (0.1.1)

In situations where the sign bit can be excluded, each of the above examples can be reduced by 1 bit further, keeping only the first row of the above tables. A 2-bit float with 1-bit exponent and 1-bit mantissa would only have 0, 1, Inf, NaN values.

1-bit (0.1.0)

Removing the mantissa would allow only two values: 0 and Inf. Removing the exponent does not work: the above formulae produce 0 and sqrt(2)/2. The exponent must be at least 1 bit, or else the format no longer makes sense as a float (it would just be a signed number).
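To see where sqrt(2)/2 comes from, the bias and subnormal formulas can be applied to a hypothetical (0.0.1) format with a single mantissa bit m. The working below assumes SE = 0, and the only available exponent field value is the empty one, read as 0 (subnormal):

$$
B = 2^{E-1} - SE = 2^{-1} = \tfrac{1}{2}, \qquad
\text{value} = 0.m_2 \times 2^{1-B} = \frac{m}{2}\,\sqrt{2} \in \left\{0,\ \tfrac{\sqrt{2}}{2}\right\}
$$

The bias is no longer an integer, which is why the result is an irrational number rather than a power of two.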
