TensorFloat-32

Numbering format in Nvidia hardware. From Wikipedia, the free encyclopedia.

TensorFloat-32 (TF32) is a floating-point number format designed for the Tensor Cores on certain Nvidia GPUs. It combines the 8-bit exponent of IEEE 754 single precision with the 10-bit mantissa of half precision.

This article needs additional citations for verification. (April 2025)

Format

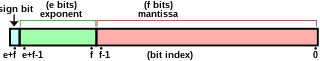

The binary format is:

- 1 sign bit

- 8 exponent bits

- 10 significand bits (also called mantissa, or precision bits)
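As a rough illustration of this layout, the sketch below reads the three fields out of an ordinary FP32 bit pattern. It assumes the TF32 fields occupy the top 19 bits of the FP32 word (sign, exponent, then the upper 10 of FP32's 23 fraction bits); the function names `fp32_bits` and `tf32_fields` are hypothetical and chosen only for this example. The low 13 fraction bits are simply ignored here, not rounded.

```python
import struct

def fp32_bits(x: float) -> int:
    """Return the 32-bit IEEE 754 single-precision pattern of x."""
    return struct.unpack("<I", struct.pack("<f", x))[0]

def tf32_fields(x: float) -> tuple:
    """Split x into (sign, exponent, significand) as laid out above.

    Assumption: the fields sit in the top 19 bits of the FP32 word.
    """
    bits = fp32_bits(x)
    sign = bits >> 31                    # 1 sign bit
    exponent = (bits >> 23) & 0xFF       # 8 exponent bits (biased by 127)
    significand = (bits >> 13) & 0x3FF   # upper 10 of the 23 fraction bits
    return sign, exponent, significand

if __name__ == "__main__":
    for value in (1.5, -2.0):
        print(value, tf32_fields(value))
```

For 1.5 the exponent field holds the bias 127 and only the top significand bit is set; for -2.0 the sign bit is set and the significand is zero.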

The format uses 19 bits in total, which fit within a double word (32 bits). It has less precision than a normal 32-bit IEEE 754 floating-point number but allows much faster computation: up to 8 times faster on an A100 than FP32 on a V100.[1]
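The precision trade-off can be made concrete from the bit widths alone. The sketch below assumes all three formats follow the usual IEEE 754 conventions (biased exponent, implicit leading 1, top exponent code reserved for infinities and NaNs); the helper `format_stats` is a hypothetical name used only here.

```python
def format_stats(exp_bits: int, frac_bits: int) -> tuple:
    """Return (epsilon, largest finite value) for an IEEE-style binary format."""
    bias = 2 ** (exp_bits - 1) - 1
    epsilon = 2.0 ** -frac_bits                  # gap between 1.0 and the next value
    max_finite = (2.0 - epsilon) * 2.0 ** bias   # all-ones fraction, largest normal exponent
    return epsilon, max_finite

# (exponent bits, explicit significand bits) per format
FORMATS = {"FP16": (5, 10), "TF32": (8, 10), "FP32": (8, 23)}

if __name__ == "__main__":
    for name, (e, m) in FORMATS.items():
        eps, largest = format_stats(e, m)
        print(f"{name}: epsilon = {eps:.3e}, largest finite = {largest:.3e}")
```

Under these assumptions TF32 has the same spacing around 1.0 as half precision and nearly the same range as single precision.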

Because it is stored in the same space as FP32, TF32 is not really a distinct storage format but a specification for reduced-precision FP32 multiply–accumulate operations. FP32 inputs are rounded to TF32 and multiplied to produce a 21-bit product (counting the implicit most significant bit, this is an 11×11→22-bit multiply), and the products are summed into a standard FP32 accumulator.[2]
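The round, multiply, accumulate sequence can be emulated in software. This is a minimal sketch, not a model of the hardware: it assumes round-to-nearest, ties-to-even when reducing FP32 to TF32 (the text above says only "rounded"), it accumulates sequentially, and the names `round_to_tf32`, `to_fp32`, and `tf32_dot` are hypothetical.

```python
import struct

def to_fp32(x: float) -> float:
    """Round a Python float (double) to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def round_to_tf32(x: float) -> float:
    """Round an FP32-representable value to 10 explicit significand bits.

    Assumption: round-to-nearest, ties-to-even.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    if (bits & 0x7F800000) == 0x7F800000:
        return x                          # pass infinities and NaNs through
    bits += 0x0FFF + ((bits >> 13) & 1)   # add just under half an ulp, plus 1 if bit 13 is odd
    bits &= 0xFFFFE000                    # clear the 13 discarded fraction bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]

def tf32_dot(a, b) -> float:
    """Dot product with TF32-rounded inputs and an FP32 accumulator."""
    acc = 0.0
    for x, y in zip(a, b):
        # The product of two 11-bit significands is exact in a double.
        product = round_to_tf32(x) * round_to_tf32(y)
        acc = to_fp32(acc + product)      # keep the running sum in FP32
    return acc

if __name__ == "__main__":
    print(round_to_tf32(0.1))
    print(tf32_dot([1.0, 2.0], [3.0, 4.0]))
```

Small integers survive the rounding unchanged, so the second call yields the exact dot product, while a value such as 0.1 loses its low-order bits before the multiply.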

See also

References
